Can we use Word2vec for sentiment analysis

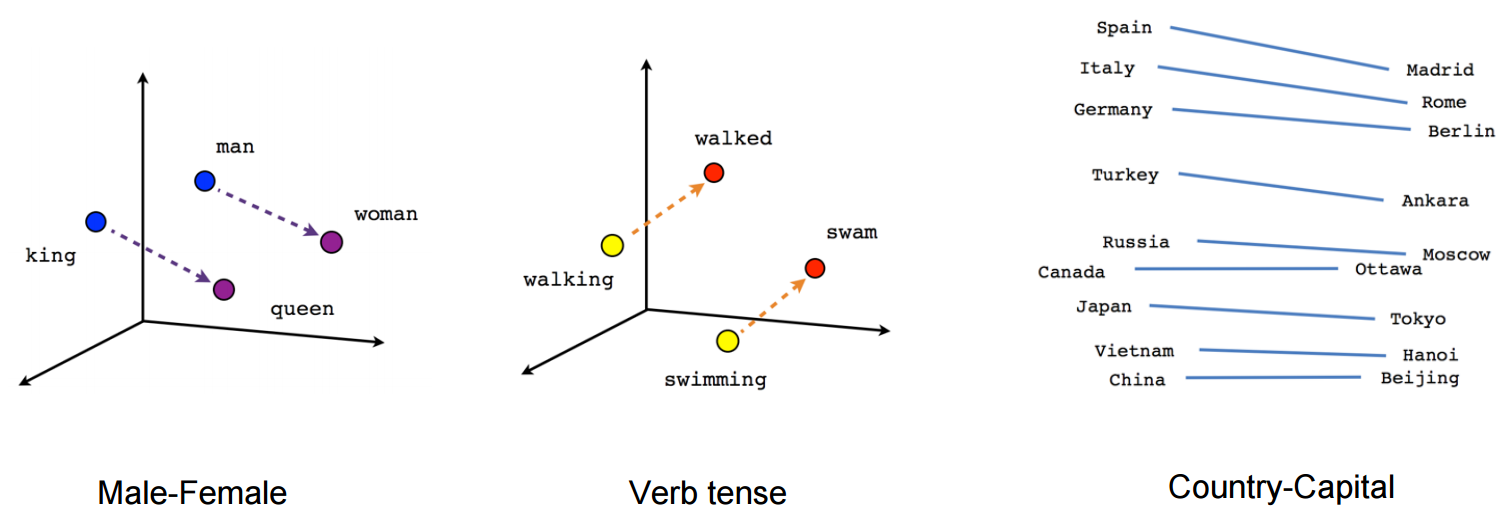

It's clear from the above examples that Word2Vec is able to learn non-trivial relationships between words. This is what makes its embeddings powerful for many NLP tasks, including, in our case, sentiment analysis.

What algorithms are used in Word2vec

Word2Vec can use either of two algorithms: CBOW (continuous bag of words) or Skip-Gram. We will only use the Skip-Gram neural network algorithm in this article. This algorithm uses a center word to predict the probability of each word in the vocabulary V being a context word within a chosen window size.
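As a rough illustration of how Skip-Gram frames its training data, the sketch below generates (center word, context word) pairs from a tokenized sentence. The function name `skipgram_pairs`, the sample sentence, and the window size are assumptions made for this example; the neural network that learns from these pairs is not shown.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every position in `tokens`."""
    pairs = []
    for i, center in enumerate(tokens):
        # Context positions lie within `window` words on either side.
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


sentence = "the movie was surprisingly good".split()
pairs = skipgram_pairs(sentence, window=1)
print(pairs)
```

Each pair is one training example: the model sees the center word and is asked to assign high probability to the paired context word.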

Is Bert better than Word2vec

Difference between Word2vec and BERT:

Vectors: Word2vec stores a single vector representation for each word, whereas BERT generates a vector for a word based on how the word is used in a phrase or sentence.

Where can I use Word2vec

Using Word2vec, one can find similar words, find dissimilar words, perform dimensionality reduction, and more. Another important feature of Word2vec is that it converts a high-dimensional representation of text into lower-dimensional vectors.
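Finding similar words usually comes down to comparing vectors with cosine similarity. The sketch below uses a tiny hand-made table of 3-dimensional vectors; the values in `toy_vectors` and the helper `most_similar` are invented for illustration and do not come from a trained model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, made up for this example.
toy_vectors = {
    "good": [0.9, 0.1, 0.3],
    "great": [0.8, 0.2, 0.4],
    "bad": [-0.7, 0.1, 0.2],
    "table": [0.0, 0.9, -0.5],
}


def most_similar(word, vectors, topn=2):
    """Rank all other words by cosine similarity to `word`."""
    query = vectors[word]
    scores = [
        (other, cosine(query, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


ranked = most_similar("good", toy_vectors)
print(ranked)
```

Sorting in the opposite direction gives the most dissimilar words under the same measure.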

Can we use SVM for sentiment analysis

This paper introduces an approach to sentiment analysis which uses support vector machines (SVMs) to bring together diverse sources of potentially pertinent information, including several favorability measures for phrases and adjectives and, where available, knowledge of the topic of the text.

What is the downside of Word2Vec

Word2vec challenges:

- Inability to handle unknown or OOV words.
- No shared representations at sub-word levels.
- Scaling to new languages requires new embedding matrices.
- Cannot be used to initialize state-of-the-art architectures.

What is the limitation of Word2Vec algorithm

Perhaps the biggest problem with word2vec is the inability to handle unknown or out-of-vocabulary (OOV) words. If your model hasn't encountered a word before, it will have no idea how to interpret it or how to build a vector for it. You are then forced to use a random vector, which is far from ideal.
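The random-vector fallback described above can be sketched as follows. The lookup table, the `lookup` helper, and the range of the random values are all assumptions made for this example, not part of any particular library.

```python
import random


def lookup(word, vectors, dim, rng):
    """Return (vector, found): the stored vector, or a random fallback."""
    if word in vectors:
        return vectors[word], True
    # Out-of-vocabulary: nothing was learned, so the vector is arbitrary.
    fallback = [rng.uniform(-0.5, 0.5) for _ in range(dim)]
    return fallback, False


# Hypothetical 2-dimensional embeddings for illustration.
toy_vectors = {"good": [0.9, 0.1], "bad": [-0.7, 0.1]}
rng = random.Random(0)

known_vec, known = lookup("good", toy_vectors, 2, rng)
oov_vec, found = lookup("goodish", toy_vectors, 2, rng)
print(known, found)
```

The fallback for "goodish" carries no information about its similarity to "good", which is why the random vector is far from ideal.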

Is Word2Vec self supervised learning

Self-supervised learning is a form of learning in which the training labels are derived from the input data itself rather than from human annotation. Word2vec and similar word embeddings are a good example of self-supervised learning.

Is Word2Vec better than Tfidf

Not always. In the emotion-classification setting this answer is drawn from, the TF-IDF model performed better than the Word2vec model because the amount of data in each emotion class was not balanced and several classes had very few examples. The "surprised" class in particular was a small minority, with far fewer examples than the other emotions.
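For reference, TF-IDF weighting can be sketched in a few lines. The version below uses one common variant (term frequency normalized by document length, multiplied by the unsmoothed log of inverse document frequency); library implementations often add smoothing, so their numbers will differ. The toy documents are invented for the example.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter()
    for doc in docs:
        df.update(set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    "what a surprise".split(),
    "what a happy day".split(),
    "what a sad day".split(),
]
weights = tfidf(docs)
print(weights[0])
```

Terms that appear in every document get a weight of zero, while a term unique to one document keeps a high weight even if that document's class is rare.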

Can you use word embeddings with SVM

Yes. One proposed framework performs sentiment analysis using word embeddings together with feature reduction. Word embedding is a technique that provides a low-dimensional vector representation of words, and the feature reduction method employs a support vector machine (SVM) classifier.
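One simple way to turn word embeddings into fixed-length input for a classifier is to average the vectors of the words in each document. This is a common baseline, not necessarily what the framework above does, and the vectors and the `document_vector` helper are made up for this sketch.

```python
def document_vector(tokens, vectors, dim):
    """Average the vectors of known tokens; all zeros if none are known."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * dim
    # zip(*known) groups the values of each dimension together.
    return [sum(component) / len(known) for component in zip(*known)]


# Hypothetical 2-dimensional embeddings for illustration.
toy_vectors = {"good": [1.0, 0.0], "great": [0.8, 0.2], "bad": [-1.0, 0.0]}

features = [
    document_vector("good great film".split(), toy_vectors, 2),
    document_vector("bad film".split(), toy_vectors, 2),
]
print(features)
```

Each row of `features` has the same length regardless of document length, so the rows could be passed, together with sentiment labels, to an SVM implementation such as scikit-learn's `SVC`.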

Can SVM be used in NLP

Yes. Since the SVM is usually used as a binary classifier and an NLP problem is in most cases equivalent to a multi-class classification problem, the multi-class problem needs to be transformed into binary classification problems. Several methods have been proposed for this transformation.
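One widely used transformation is one-vs-rest, in which each class gets its own binary problem ("this class" versus "everything else"). The source does not say which methods it has in mind, so treat this as one illustrative option; the function name and labels are invented for the example.

```python
def one_vs_rest_labels(labels):
    """Map each class to a binary label list: 1 for that class, 0 otherwise."""
    classes = sorted(set(labels))
    return {cls: [1 if y == cls else 0 for y in labels] for cls in classes}


labels = ["positive", "negative", "neutral", "positive"]
binary_problems = one_vs_rest_labels(labels)

for cls, binary in binary_problems.items():
    print(cls, binary)
```

A separate binary SVM would then be trained on each label list, and at prediction time the class whose classifier is most confident is chosen.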

Why is SVM not good for text classification

SVM is effective when there are more dimensions than samples, and it works well when classes are well separated. It is a binary model by design, although it can be applied to multi-class problems with very good results. Its main handicap is the training cost on large datasets.

Should I use GloVe or Word2vec

The two methods build their vectors differently. Word2vec learns vectors by predicting words from their local context window, while GloVe learns vectors from global word co-occurrence counts collected over the whole corpus.
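To make the idea of co-occurrence statistics concrete, the sketch below counts how often word pairs appear within a window of each other across a toy corpus. It shows only the counting step; GloVe's actual training fits vectors to such counts and is not reproduced here. The corpus and the function name are assumptions for the example.

```python
from collections import Counter


def cooccurrence_counts(corpus, window=1):
    """Count (word, neighbor) pairs within `window` positions, corpus-wide."""
    counts = Counter()
    for tokens in corpus:
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts


corpus = [
    "good movie".split(),
    "good movie indeed".split(),
    "bad movie".split(),
]
counts = cooccurrence_counts(corpus)
print(counts[("good", "movie")], counts[("bad", "movie")])
```

The counts are aggregated over every sentence before any vectors are learned, which is the sense in which the statistics are global rather than per-window.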

Can Word2vec overfit

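Word2Vec's objective is to fit the word patterns of its training corpus as closely as it can, so in that sense overfitting is not the usual concern. The learned vectors can still transfer poorly if the corpus is small or unlike the text used downstream.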

What is the problem with Word2Vec

One of the main issues with Word2Vec is its inability to handle unknown or out-of-vocabulary words. If Word2Vec has not encountered a term before, it has no vector for it, and a common fallback is to assign a random vector, which is not optimal.

Is Word2Vec supervised or unsupervised

Word2Vec is an example of unsupervised (self-taught) learning: it is trained on raw text with no human-provided labels.

Is Word2Vec better than GloVe

In practice, Word2Vec uses negative sampling, which replaces the softmax function with a sigmoid function. This reportedly results in cone-shaped clusters of words in the vector space, while GloVe's word vectors are more discretely spread, and it makes Word2Vec faster to compute than GloVe.
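The sigmoid-based objective can be sketched for a single training example. Instead of a softmax over the whole vocabulary, the loss rewards a high score for the true (center, context) pair and low scores for a handful of sampled negative words. The vectors below are arbitrary numbers chosen for illustration, and the function names are assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def negative_sampling_loss(center, context, negatives):
    """Loss for one true pair plus a list of sampled negative vectors."""
    # True pair: push sigmoid(context . center) toward 1.
    loss = -math.log(sigmoid(dot(context, center)))
    # Negatives: push sigmoid(negative . center) toward 0.
    for neg in negatives:
        loss -= math.log(sigmoid(-dot(neg, center)))
    return loss


center = [0.5, 0.1]
context = [0.4, 0.2]
negatives = [[-0.3, 0.1], [0.0, -0.2]]
loss = negative_sampling_loss(center, context, negatives)
print(loss)
```

The cost per example grows with the number of negatives rather than with the vocabulary size, which is where the speed advantage over a full softmax comes from.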

Why word embedding is better than TF-IDF

The word embedding method contains a much more 'noisy' signal compared to TF-IDF. A word embedding is a much more complex word representation and carries much more hidden information. In our case most of that information is unnecessary and creates false patterns in our model.

What is the weakness of word embedding

Historically, one of the main limitations of static word embeddings or word vector space models is that words with multiple meanings are conflated into a single representation (a single vector in the semantic space). In other words, polysemy and homonymy are not handled properly.
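The conflation of senses is easy to see with a static lookup table: the word "bank" receives the same vector whether it appears next to "river" or "money". The table and the `embed` helper below are hypothetical and exist only to illustrate the point.

```python
# Hypothetical static embeddings: exactly one vector per word.
static_vectors = {
    "bank": [0.2, 0.7],
    "river": [0.1, 0.9],
    "money": [0.8, 0.1],
}


def embed(sentence, vectors):
    """Look up each known word; the surrounding words are ignored."""
    return [vectors[token] for token in sentence.split() if token in vectors]


river_sense = embed("river bank", static_vectors)
money_sense = embed("money bank", static_vectors)

# The vector for "bank" is identical in both sentences.
same = river_sense[1] == money_sense[1]
print(same)
```

A contextual model would instead produce a different vector for "bank" in each sentence.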

What are the disadvantages of word embedding

One of the most significant limitations of word embeddings is limited contextual information: they are limited in their ability to capture complex semantic relationships between words, because they only consider the local context of a word within a sentence or document.