Can a web crawler collect all pages on the web?

No crawler can guarantee that. Because it is not possible to know how many webpages there are on the Internet in total, web crawler bots start from a seed, a list of known URLs. They crawl the webpages at those URLs first. As they crawl those pages, they find hyperlinks to other URLs and add them to the list of pages to crawl next (often called the crawl frontier).
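A minimal sketch of that seed-and-frontier loop in Python. It assumes a hypothetical in-memory LINK_GRAPH in place of real HTTP requests and HTML parsing, so it runs offline. The made-up orphan page shows the coverage limit: nothing links to it and it is not a seed, so it is never visited.

```python
from collections import deque

# Hypothetical link graph standing in for the web: each URL maps to the
# hyperlinks found on that page. A real crawler would fetch and parse pages.
LINK_GRAPH = {
    "https://a.example/": ["https://a.example/about", "https://b.example/"],
    "https://a.example/about": ["https://a.example/"],
    "https://b.example/": ["https://c.example/"],
    "https://c.example/": [],
    "https://orphan.example/": ["https://a.example/"],  # nothing links here
}


def crawl(seeds, get_links, max_pages=100):
    """Breadth-first crawl that starts from a list of seed URLs."""
    frontier = deque(seeds)  # URLs waiting to be crawled
    seen = set(seeds)        # every URL ever queued, so nothing is queued twice
    crawled = []
    while frontier and len(crawled) < max_pages:
        url = frontier.popleft()
        crawled.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return crawled


order = crawl(["https://a.example/"], lambda url: LINK_GRAPH.get(url, []))
print(order)  # orphan.example is never reached
```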

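Can we crawl any website?

Web scraping and crawling aren't illegal by themselves. After all, you could scrape or crawl your own website without a hitch. Startups love it because it's a cheap and powerful way to gather data without the need for partnerships. Whether you may crawl a particular site, however, depends on its terms of service, its robots.txt rules, and the law where you operate.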

How to crawl data from a website using Python

To extract data from a website with Python, follow these basic steps:
1. Find the URL that you want to scrape.
2. Inspect the page.
3. Find the data you want to extract.
4. Write the code.
5. Run the code and extract the data.
6. Store the data in the required format.
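Steps 3 to 6 might look like the sketch below. It uses only the standard library (html.parser and csv) rather than a third-party scraper, and the HTML snippet and the class names "name" and "price" are made up for illustration. On a real site you would find the right class names while inspecting the page and download the HTML first (for example with urllib.request.urlopen).

```python
import csv
import io
from html.parser import HTMLParser


class TextByClass(HTMLParser):
    """Collects the text of elements whose class attribute contains css_class.

    Simplification: assumes each target element holds plain text, so any
    closing tag ends the capture.
    """

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.capturing = False
        self.items = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self.capturing = True
            self.items.append("")

    def handle_endtag(self, tag):
        self.capturing = False

    def handle_data(self, data):
        if self.capturing:
            self.items[-1] += data


def extract(html, css_class):
    """Return the stripped text of every element carrying css_class."""
    parser = TextByClass(css_class)
    parser.feed(html)
    parser.close()
    return [item.strip() for item in parser.items]


def to_csv(header, rows):
    """Serialize rows to CSV text (write to a file instead to keep it)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up page; in practice this would be the downloaded HTML.
HTML = """
<ul>
  <li><span class="name">Blue mug</span> <span class="price">$8</span></li>
  <li><span class="name">Red mug</span> <span class="price">$9</span></li>
</ul>
"""

names = extract(HTML, "name")
prices = extract(HTML, "price")
print(to_csv(["name", "price"], zip(names, prices)))
```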

Does Google crawl all websites?

Like all search engines, Google uses an algorithmic crawling process to determine which sites to crawl, how often, and how many pages to fetch from each site. Google doesn't necessarily crawl all the pages it discovers; reasons include the page being blocked from crawling (robots.txt).
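Python's standard library can check robots.txt rules before a URL is fetched. This is a sketch with a made-up robots.txt and a hypothetical user agent name, MyCrawler. urllib.robotparser implements a simpler rule matcher than Googlebot does, so treat its answers as an approximation of how Google would read the same file.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt. For a live site you would instead call
# parser.set_url("https://example.com/robots.txt") and parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ["https://example.com/blog/post", "https://example.com/private/report"]:
    verdict = "allowed" if parser.can_fetch("MyCrawler", url) else "blocked"
    print(url, verdict)
```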

Does Google crawl all pages?

No. Googlebot doesn't crawl all the pages it discovers. Google's crawlers are also programmed to avoid crawling a site too fast and overloading it. This mechanism is based on the site's responses (for example, HTTP 500 errors mean "slow down") and on settings in Search Console.
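The "slow down on errors" idea can be sketched as an adaptive delay. This is not Google's algorithm: the AdaptiveDelay class, the doubling/halving policy, the extra treatment of HTTP 429, and the default bounds are all assumptions chosen to show the mechanism.

```python
class AdaptiveDelay:
    """Hypothetical politeness delay that backs off when the server struggles.

    A 429 or 5xx response doubles the delay (capped at `maximum`); any other
    response halves it again (floored at `minimum`).
    """

    def __init__(self, minimum=1.0, maximum=60.0):
        self.minimum = minimum
        self.maximum = maximum
        self.delay = minimum

    def record(self, status):
        """Update the delay from an HTTP status code and return the new delay."""
        if status == 429 or 500 <= status < 600:
            self.delay = min(self.delay * 2, self.maximum)
        else:
            self.delay = max(self.delay / 2, self.minimum)
        return self.delay


limiter = AdaptiveDelay()
for status in [200, 500, 503, 200, 200]:
    # A crawler would call time.sleep(limiter.delay) before its next request.
    print(status, limiter.record(status))
```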

How do I scrape an entire website?

The process breaks down into roughly these steps:
1. Inspect the HTML of the website you want to crawl.
2. Access the website's URLs with code and download all the HTML content on each page.
3. Format the downloaded content into a readable form.
4. Extract the useful information and save it in a structured format.
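Steps 3 and 4 (format the content, then save it in a structured form) could be sketched with the standard library as below. PageText and page_record are hypothetical names, and the HTML is made up; the sketch drops script and style contents, joins the remaining visible text, and produces a JSON-serializable record per page.

```python
import json
from html.parser import HTMLParser


class PageText(HTMLParser):
    """Turns raw HTML into a title plus readable text, skipping scripts and styles."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0    # >0 while inside <script> or <style>
        self.in_title = False
        self.title = ""
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag == "title":
            self.in_title = True

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1
        elif tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.skip_depth:
            return
        if self.in_title:
            self.title += data
        elif data.strip():
            self.chunks.append(data.strip())


def page_record(url, html):
    """Build a structured record for one downloaded page."""
    parser = PageText()
    parser.feed(html)
    parser.close()
    return {"url": url, "title": parser.title.strip(), "text": " ".join(parser.chunks)}


html = ("<html><head><title>Demo</title><style>p {color: red}</style></head>"
        "<body><h1>Hello</h1><p>Readable text.</p><script>var x = 1;</script></body></html>")
record = page_record("https://example.com/", html)
print(json.dumps(record, indent=2))
```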

Can you get banned for web scraping?

Yes. The most common way sites detect web scrapers is by examining their IP addresses, so much of the advice on scraping without getting blocked comes down to spreading requests across several IP addresses so that no single address gets banned.

How do I crawl all pages of a website in Python?

1. Import the necessary modules (import requests, from bs4 import BeautifulSoup, from tqdm import tqdm, import json).
2. Write a function that gets the text data from a website URL.
3. Write a function that gets all links from one page and stores them in a list.
4. Write a function that loops over all the subpages.
5. Create the loop.
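A standard-library sketch of those functions follows. It swaps requests and BeautifulSoup for urllib and html.parser so it runs without extra packages, leaves out the tqdm progress bar, and uses hypothetical names (get_links, crawl_site). It stays on the start URL's host, caps the crawl at max_pages, and has no error handling for failed downloads.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def get_links(page_url, html):
    """Return absolute, fragment-free links that stay on page_url's host."""
    parser = LinkParser()
    parser.feed(html)
    host = urlparse(page_url).netloc
    links = []
    for href in parser.links:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).netloc == host:
            links.append(absolute)
    return links


def fetch(url):
    """Download a page as text (requests.get(url).text would also work)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl_site(start_url, fetch_page=fetch, max_pages=50):
    """Breadth-first crawl of every reachable page on the start URL's host."""
    queue, seen, pages = deque([start_url]), {start_url}, {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        pages[url] = fetch_page(url)
        for link in get_links(url, pages[url]):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return pages


# Offline demo: a dict of made-up pages stands in for the network.
FAKE_SITE = {
    "https://site.example/": '<a href="/about">About</a> <a href="https://other.example/">Out</a>',
    "https://site.example/about": '<a href="/">Home</a> <a href="/team#top">Team</a>',
    "https://site.example/team": "<p>No links here</p>",
}
pages = crawl_site("https://site.example/", fetch_page=FAKE_SITE.__getitem__)
print(sorted(pages))
```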

How do I crawl multiple websites in Python?

The method goes as follows:
1. Create a “for” loop that scrapes all the href attributes (and therefore the URLs) for all the pages you want.
2. Clean the data and create a list containing all the URLs collected.
3. Create a new loop that goes over the list of URLs to scrape all the information needed.
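The cleaning step is where most of the care goes. A sketch, assuming the href values have already been collected as strings: it resolves relative links against the page URL, drops #fragments and non-HTTP schemes such as mailto:, and removes duplicates while keeping the original order. clean_urls is a hypothetical helper name.

```python
from urllib.parse import urldefrag, urljoin, urlparse


def clean_urls(page_url, hrefs):
    """Turn raw href values into a deduplicated list of absolute http(s) URLs.

    The order of first appearance is kept so the scraping loop is predictable.
    """
    cleaned, seen = [], set()
    for href in hrefs:
        href = href.strip()
        if not href:
            continue
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, tel: and similar links
        if absolute not in seen:
            seen.add(absolute)
            cleaned.append(absolute)
    return cleaned


raw_hrefs = ["/page/1", "/page/2#reviews", " /page/1 ", "mailto:team@example.com",
             "https://example.com/page/3"]
urls = clean_urls("https://example.com/list", raw_hrefs)
print(urls)
# The final loop would then visit each URL: for url in urls: scrape(url)  (scrape is hypothetical)
```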

How do you crawl a website?

The six steps to crawling a website are:
1. Understand the domain structure.
2. Configure the URL sources.
3. Run a test crawl.
4. Add crawl restrictions.
5. Test your changes.
6. Run your crawl.
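Crawl restrictions usually come down to a handful of rules: which host, which sections, which patterns to skip, and how deep to go. The CrawlRules class below is a hypothetical illustration of such rules in Python, not the configuration format of any particular crawling tool; a small max_depth is a cheap way to run the test crawl before the full one.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class CrawlRules:
    """Hypothetical crawl restrictions: where a crawler may go and how deep."""

    allowed_host: str
    include_prefixes: list = field(default_factory=lambda: ["/"])
    exclude_patterns: list = field(default_factory=list)  # regexes tested against the path
    max_depth: int = 3  # clicks away from the start URL

    def allows(self, url, depth):
        """Return True if the URL at this depth passes every restriction."""
        parts = urlparse(url)
        path = parts.path or "/"
        if parts.netloc != self.allowed_host or depth > self.max_depth:
            return False
        if not any(path.startswith(prefix) for prefix in self.include_prefixes):
            return False
        return not any(re.search(pattern, path) for pattern in self.exclude_patterns)


# A deliberately narrow rule set for a first test crawl.
rules = CrawlRules(
    allowed_host="shop.example",
    include_prefixes=["/products/", "/blog/"],
    exclude_patterns=[r"/cart", r"\.pdf$"],
    max_depth=2,
)
for url, depth in [("https://shop.example/products/mug", 1),
                   ("https://shop.example/products/manual.pdf", 1),
                   ("https://shop.example/account", 1),
                   ("https://shop.example/blog/news", 3)]:
    print(url, depth, rules.allows(url, depth))
```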

Why did Google stop crawling my site?

Did you recently create the page or request indexing? It can take time for Google to index your page; allow at least a week after submitting a sitemap or an indexing request before assuming there is a problem. If your page or site change is recent, check back in a week to see whether it is still missing.

Does Google crawl URLs?

If links appear in the text-only version of Google's cached page, Google can crawl them. Beyond the text-only view, Google Cache contains the indexed version of a page, which makes it a handy way to identify elements missing from the mobile version. Many search optimizers ignore Google Cache.

Does Google crawl hidden links?

Google renders the web page to approximate what a user might see. If content is hidden behind a “read more” link that makes it visible on the page, that's okay. If a user can see it, Google can see it too, because Google views web pages as a user does.

Is web scraping easy?

Web scraping might seem intimidating to some people, especially if you've never written code. However, there are much simpler ways to automate your data gathering without writing a single line of code.

How do I scrape data from multiple websites?

When scraping multiple web pages using Python:
1. Seek permission before you scrape a site.
2. Read and understand the website's terms of service and robots.txt file.
3. Limit the frequency of your scraping.
4. Use web scraping tools that respect website owners' terms of service.
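Limiting the frequency of requests can be as simple as enforcing a minimum gap between them. A sketch, assuming a fixed interval is acceptable to the site (check its terms of service and any Crawl-delay line in robots.txt); Throttle is a hypothetical helper, and its clock and sleep functions are injectable so the timing can be tested without actually waiting.

```python
import time


class Throttle:
    """Keeps at least `interval` seconds between consecutive requests."""

    def __init__(self, interval, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock    # injectable so tests don't have to wait
        self.sleep = sleep
        self.last = None      # time of the previous request, if any

    def wait(self):
        """Block until enough time has passed since the previous call."""
        now = self.clock()
        if self.last is not None:
            remaining = self.interval - (now - self.last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self.last = now


throttle = Throttle(interval=1.0)
for url in ["https://example.com/page/1", "https://example.com/page/2"]:
    throttle.wait()
    print("fetching", url)  # download and parse the page here
```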

Do hackers use web scraping?

Yes. A scraping bot can gather user data from social media sites. Then, by scraping sites that contain addresses and other personal information and correlating the results, a hacker could commit identity crimes such as submitting fraudulent credit card applications.

Can websites tell if you scrape them?

Websites detect web crawlers and scraping tools by checking their IP addresses, user agents, browser parameters, and general behavior. If a site finds your traffic suspicious, it serves you CAPTCHAs, and eventually your requests get blocked because your crawler has been detected.
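On the site's side, the "general behavior" signal can be as simple as counting requests per IP address in a sliding time window. The RateFlagger class and its thresholds are hypothetical, and real bot detection combines many such signals, but the sketch shows why a crawler that fires requests quickly from one address stands out.

```python
from collections import defaultdict, deque


class RateFlagger:
    """Flags an IP that sends more than `limit` requests within `window` seconds."""

    def __init__(self, limit=10, window=60.0):
        self.limit = limit
        self.window = window
        self.hits = defaultdict(deque)  # ip -> timestamps of its recent requests

    def is_suspicious(self, ip, timestamp):
        """Record one request and report whether this IP is over the limit."""
        recent = self.hits[ip]
        recent.append(timestamp)
        while recent and timestamp - recent[0] > self.window:
            recent.popleft()  # forget requests that fell out of the window
        return len(recent) > self.limit


flagger = RateFlagger(limit=3, window=10.0)
for t in [0, 1, 2, 3]:
    print(t, flagger.is_suspicious("203.0.113.7", t))
```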

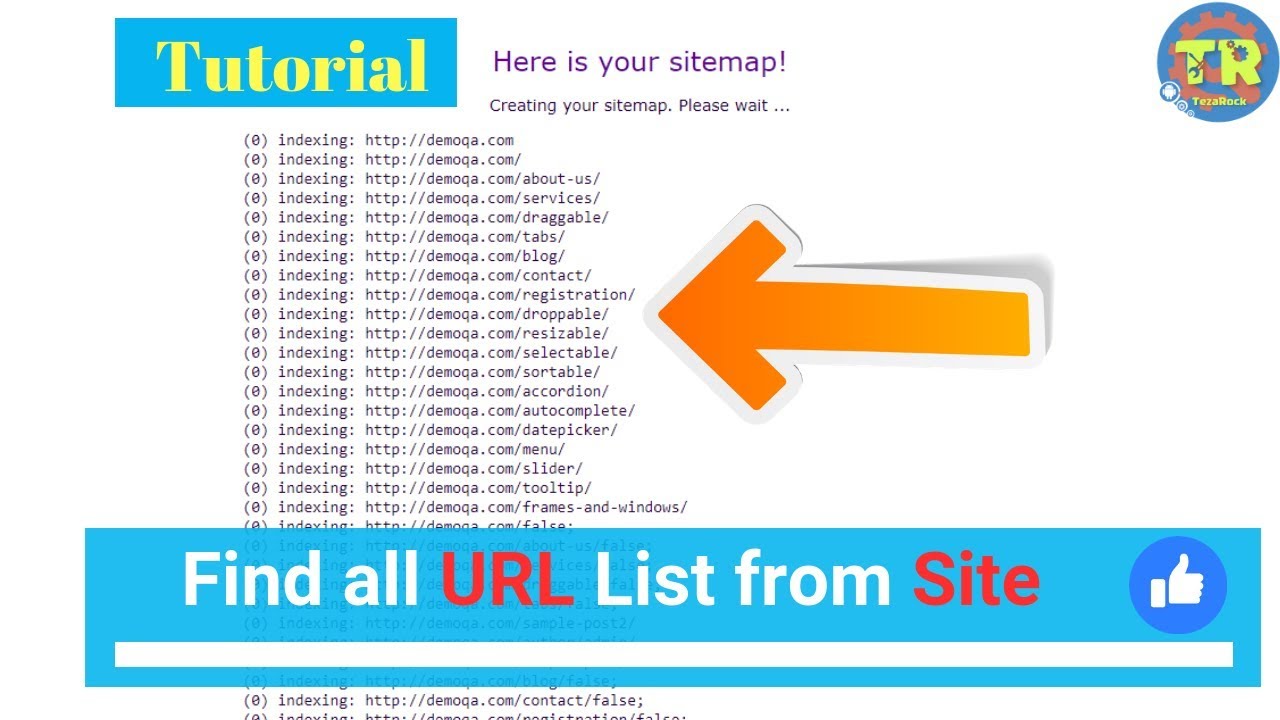

How do I extract all pages from a website?

To extract all URLs from a webpage:
Step 1: Run JavaScript code in Google Chrome Developer Tools. Open Developer Tools with Cmd + Opt + I (Mac) or F12 (Windows).
Step 2: Copy and paste the exported URLs into a CSV file or a spreadsheet tool.
Step 3: Filter the CSV data to get the relevant links.

Can Google crawl a site?

Once Google discovers a page's URL, it may visit (or "crawl") the page to find out what's on it. Google uses a huge set of computers to crawl billions of pages on the web. The program that does the fetching is called Googlebot (also known as a crawler, robot, bot, or spider).

How do I force Google to crawl my pages?

Here's Google's quick two-step process:
1. Inspect the page URL. Enter your URL under the “URL Prefix” portion of the inspect tool.
2. Request reindexing. After the URL has been tested for indexing errors, it gets added to Google's indexing queue.

How do I make my links crawlable?

Follow these practices:
- Make your links crawlable.
- Think about anchor text placement.
- Write good anchor text.
- Internal links: cross-reference your own content.
- External links: link to other sites.
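Google can generally follow a link only if it is an <a> element with an href attribute pointing at a real URL, so "links" built purely from JavaScript handlers are a common problem. The audit below is a rough sketch of that check using html.parser; LinkAudit is a hypothetical name and the HTML is made up.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Splits <a> tags into crawlable links and ones a crawler likely can't follow."""

    def __init__(self):
        super().__init__()
        self.crawlable = []   # href values that point at a real URL
        self.problems = []    # attribute dicts of anchors without one

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = (attributes.get("href") or "").strip()
        if not href or href == "#" or href.lower().startswith("javascript:"):
            self.problems.append(attributes)
        else:
            self.crawlable.append(href)


# Made-up markup: one normal link and two JavaScript-only "links".
HTML = """
<a href="/guides/crawling">Crawling guide</a>
<a onclick="goTo('/pricing')">Pricing</a>
<a href="javascript:goTo('/faq')">FAQ</a>
"""

audit = LinkAudit()
audit.feed(HTML)
audit.close()
print("crawlable:", audit.crawlable)
print("problems:", audit.problems)
```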

How do you check if a URL is crawled?

Enter the URL of the page or image to test and click Test URL. In the results, expand the "Crawl" section. You should see the following result: "Crawl allowed" should be "Yes".

Can I scrape multiple websites at once?

Looping over page numbers works well for a single paginated site, but what if you need to scrape multiple sites and don't know their page numbers? You would have to go through each URL one by one and manually write a script for each one. Instead, you can create a list of these URLs and loop through them.

Can you get IP banned for web scraping?

Yes, and having your IP address(es) banned as a web scraper is a pain. If websites block your IPs, you won't be able to collect data from them, so anyone who wants to collect web data at any scale needs to understand how to bypass IP bans.