Can you extract data from the entire web

Using a web scraping API can be a convenient way to extract data from a website, as the data is already organized and ready for use. However, not all websites offer APIs, and those that do may have restrictions on how the data can be used.

Can you pull data from a website without an API

Use web scrapers

If you need data from a popular website such as Amazon or Twitter, you may save time by using an existing web scraper solution instead of applying for API access.

How do I extract all data

There are three steps in the ETL process:

1. Extraction: Data is taken from one or more sources or systems.
2. Transformation: Once the data has been successfully extracted, it is ready to be refined.
3. Loading: The transformed, high-quality data is then delivered to a single, unified target location for storage and analysis.
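The three ETL steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the CSV text, the `products` table and the cleaning rules are hypothetical, and an in-memory SQLite database stands in for the target location.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for an export from a real system.
RAW_CSV = """name,price
 Widget ,4.50
Gadget,
gizmo,12
"""

def extract(text):
    """Extraction: read rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transformation: trim names, drop rows with no price, convert types."""
    cleaned = []
    for row in rows:
        price = row["price"].strip()
        if not price:
            continue  # assumed rule: a row without a price is unusable
        cleaned.append((row["name"].strip().title(), float(price)))
    return cleaned

def load(records, conn):
    """Loading: write the cleaned records to a single target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the real target system
load(transform(extract(RAW_CSV)), conn)
rows = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
print(rows)
```

Keeping each step in its own function makes it possible to change the source or the target without touching the cleaning logic.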

How do I scrape data from a website without coding

ParseHub is a free cloud-based web scraping tool that makes it easy for users to extract online data from websites, whether static or dynamic. With its simple point-and-click interface, you can start selecting the data you want to extract without any coding required.

Is API better than web scraping

It depends on what you need. APIs provide access to a limited set of data, whereas web scraping allows for a wider range of data collection. On the other hand, scraped data may require intensive cleaning after parsing, while an API returns data in a machine-readable format.
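The difference in cleaning effort can be illustrated with a small Python sketch. Both inputs are made up for this example: `api_body` stands in for a JSON API response and `html` for a scraped page fragment describing the same product.

```python
import json
import re
from html import unescape

# Hypothetical API response: already structured, so one call parses it.
api_body = '{"product": "Widget", "price": 4.5}'
api_record = json.loads(api_body)

# Hypothetical HTML fragment for the same product: the values have to be
# located in the markup and cleaned before they are usable.
html = '<div class="product"> <b>Widget</b> <span>Price:&nbsp;$4.50 </span></div>'

name = re.search(r"<b>(.*?)</b>", html).group(1).strip()
raw_price = unescape(re.search(r"<span>(.*?)</span>", html).group(1))
price = float(re.sub(r"[^\d.]", "", raw_price))  # keep only digits and the dot

scraped_record = {"product": name, "price": price}
print(api_record == scraped_record)
```

Regular expressions are used here only to keep the sketch short; for real pages a proper HTML parser is usually the more robust choice.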

What is full data extraction

Full extraction involves extracting all the data from the source system and loading it into the target system. This process is typically used when the target system is being populated for the first time. Incremental stream extraction involves extracting only the data that has changed since the last extraction.
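A minimal Python sketch of the two approaches follows. It assumes each source row carries an `updated_at` timestamp that can be compared with the time of the last run; the rows, the column name and the dates are hypothetical.

```python
from datetime import datetime

# Hypothetical source rows; "updated_at" is an assumed change-tracking column.
SOURCE = [
    {"id": 1, "value": "a", "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "value": "b", "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "value": "c", "updated_at": datetime(2024, 1, 9)},
]

def full_extract(source):
    """Full extraction: take every row, regardless of when it changed."""
    return list(source)

def incremental_extract(source, last_run):
    """Incremental extraction: take only rows changed since the last run."""
    return [row for row in source if row["updated_at"] > last_run]

# First load: the target is empty, so everything is extracted.
target = {row["id"]: row for row in full_extract(SOURCE)}
last_run = datetime(2024, 1, 9)

# Later, one row is updated and one is added in the source.
SOURCE[1] = {"id": 2, "value": "b2", "updated_at": datetime(2024, 1, 12)}
SOURCE.append({"id": 4, "value": "d", "updated_at": datetime(2024, 1, 13)})

# Subsequent load: only the changed rows are extracted and applied.
changed = incremental_extract(SOURCE, last_run)
for row in changed:
    target[row["id"]] = row

print([row["id"] for row in changed])
```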

How do I get data from a URL

How to access data from a URL using Java:

1. Create a URLConnectionReader class.
2. Create a new URL object and pass it the URL you want to access.
3. Using this URL object, create a URLConnection object.
4. Use an InputStreamReader and a BufferedReader to read from the URL connection.

How do I scrape data from a public website

The web scraping process:

1. Identify the target website.
2. Collect the URLs of the pages you want to extract data from.
3. Make a request to these URLs to get the HTML of each page.
4. Use locators to find the data in the HTML.
5. Save the data in a JSON or CSV file or some other structured format.
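The request, locate and save steps of this process might look like the following Python sketch, which uses only the standard library. The `h2` elements with class `title`, the `example-scraper/0.1` user agent string and the sample HTML are assumptions made for illustration; `fetch_html` is defined but not called so that the example runs without network access.

```python
import json
from html.parser import HTMLParser
from urllib.request import Request, urlopen

def fetch_html(url):
    """Request a page and return its HTML as text (not called in the demo)."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")

class TitleLocator(HTMLParser):
    """Locator: collect the text of every <h2> whose class list has 'title'."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "title" in classes:
            self._in_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data

def scrape(html):
    """Run the locator over one page and return structured records."""
    locator = TitleLocator()
    locator.feed(html)
    locator.close()
    return [{"title": title.strip()} for title in locator.titles]

# Hypothetical page content standing in for fetch_html(some_url).
SAMPLE_HTML = """
<html><body>
<h2 class="title">First post</h2>
<p>Intro</p>
<h2 class="title featured">Second <em>post</em></h2>
<h2>Not a title</h2>
</body></html>
"""

records = scrape(SAMPLE_HTML)
output = json.dumps(records, indent=2)  # structured format ready to save
print(output)
```

For a real site, the records would come from `scrape(fetch_html(url))` for each collected URL, and `output` would be written to a file.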

Is web scraping API legal

United States: There are no federal laws against web scraping in the United States as long as the scraped data is publicly available and the scraping activity does not harm the website being scraped.

Is Python best for web scraping

Python is an excellent choice for building web scrapers because its ecosystem includes libraries designed specifically for web scraping, such as Beautiful Soup and Scrapy. It is also easy to understand: reading Python code is similar to reading an English statement, which makes the syntax simple to learn.

How do I capture data from a website

The main steps are as follows:

1. Inspect the HTML of the website that you want to crawl.
2. Access the URL of the website using code and download all the HTML contents of the page.
3. Format the downloaded content into a readable format.
4. Extract the useful information and save it in a structured format.

How do you extract big data

5 steps to collect big data:

Step 1: Gather data. There are many ways to gather data according to different purposes.
Step 2: Store data. After gathering the data, you can put the data into databases or storage for further processing.
Step 3: Clean data.
Step 4: Reorganize data.
Step 5: Verify data.

How do I copy the contents of a URL

After the address is highlighted, press Ctrl + C or Command + C on the keyboard to copy it. You can also right-click any highlighted section and choose Copy from the drop-down menu. Once the address is copied, paste it into another program by clicking a blank field and pressing Ctrl + V or Command + V.

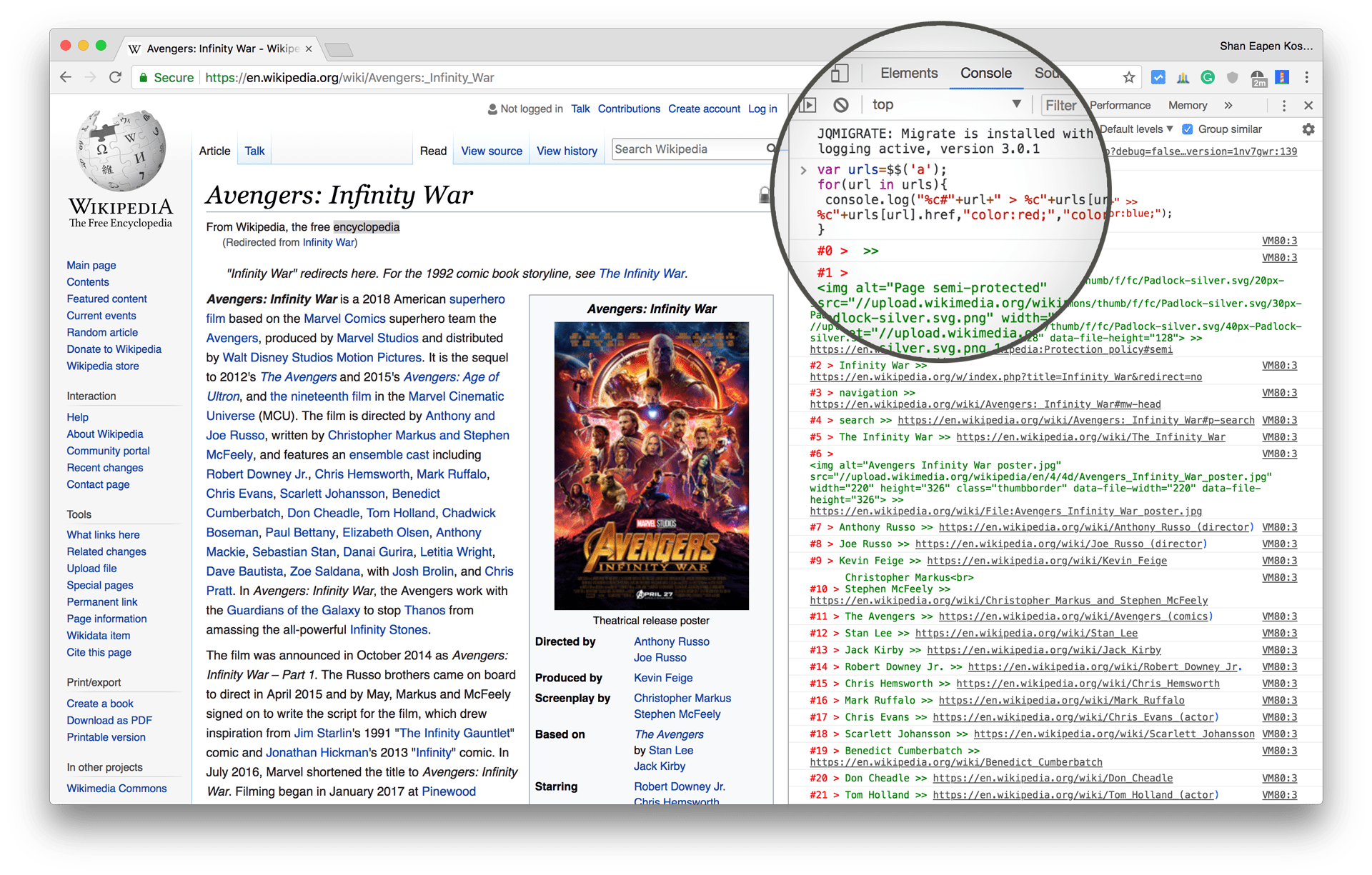

How do I scrape all URL from a website

How to extract all URLs from a webpage:

Step 1: Run JavaScript code in Google Chrome Developer Tools. Open Google Chrome Developer Tools with Cmd + Opt + I (Mac) or F12 (Windows).
Step 2: Copy and paste the exported URLs into a CSV file or a spreadsheet tool.
Step 3: Filter the CSV data to get the relevant links.
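The same extract, export and filter workflow can also be scripted. The Python sketch below is an alternative to the browser-based steps, not a reproduction of them: the sample HTML, the base URL and the "same site only" filter are assumptions chosen for illustration.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))

def extract_links(html, base_url):
    """Return the unique absolute URLs on a page, in order of appearance."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return list(dict.fromkeys(collector.links))

def same_site(links, base_url):
    """Filter: keep only links that point to the same host as the page."""
    host = urlparse(base_url).netloc
    return [link for link in links if urlparse(link).netloc == host]

# Hypothetical page and URL used in place of a downloaded document.
BASE_URL = "https://example.com/blog/"
SAMPLE_HTML = """
<a href="/about">About</a>
<a href="post-1.html">Post 1</a>
<a href="https://other.example.org/x">Elsewhere</a>
<a href="/about">About again</a>
<a name="anchor-without-href">Skip me</a>
"""

links = extract_links(SAMPLE_HTML, BASE_URL)
internal = same_site(links, BASE_URL)

# Export the filtered links as CSV text (write it to a file as needed).
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(["url"])
writer.writerows([link] for link in internal)
print(buffer.getvalue())
```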

Is it legal to scrape RSS feed

RSS scrapers grab content from any website and use it for their own search engine gains. This type of spam is among the most detested on the Internet. Illegal RSS feed scrapers can be a real problem for webmasters and bloggers, so it is essential to ensure that your content is protected.

How can I extract content from a website for free

Use Nanonets' web scraper tool to convert any webpage to editable text in 3 simple steps. Extract images, tables, text and more with this free web scraping tool. It extracts text from any webpage and provides well-formatted output in the form of a downloadable .txt file.

Can you get IP banned for web scraping

Yes. Having your IP address(es) banned as a web scraper is a pain. Websites blocking your IPs means you won't be able to collect data from them, so anyone who wants to collect web data at any kind of scale needs to understand how to bypass IP bans.

Do hackers use web scraping

A scraping bot can gather user data from social media sites. Then, by scraping sites that contain addresses and other personal information and correlating the results, a hacker could engage in identity crimes like submitting fraudulent credit card applications.

Is web scraping easier with Java or Python

Short answer: Python!

If you're scraping simple websites with a simple HTTP request, Python is your best bet. Libraries such as requests or HTTPX make it very easy to scrape websites that don't require JavaScript to work correctly. Python offers a lot of simple-to-use HTTP clients.

Is web scraping easy or not

A web scraper automates the process of extracting information from other websites, quickly and accurately. The data extracted is delivered in a structured format, making it easier to analyze and use in your projects. The process is extremely simple and works by way of two parts: a web crawler and a web scraper.

How do I extract text from a website in Excel

Using Excel Web Queries

Step 1: Create a new workbook.
Step 2: On the Data tab, select "From Web".
Step 3: Enter the URL in the "From Web" dialog box.
Step 4: Click the "OK" button to load the webpage into the "Navigator" window.
Step 5: Select the table or data you want to scrape by checking the box next to it.

How do I copy data from a website to Excel

Copy the text. Simply highlight the text you want to copy from the internet and press Ctrl+C to copy it to your clipboard. Then press Ctrl+V to paste the text into a cell of your choosing in your Excel spreadsheet. The pasted text will retain the formatting from the website.

How do I Copy an entire content

1) Navigate to a page or tab.
2) Press Command + A or Control + A on your keyboard to select all. If you're on a cover page, make sure you click in the content first.
3) A notification pops up asking whether you are trying to copy and paste all page content and widgets.

How do I extract text from multiple URLs in Python

The method goes as follows:

1. Create a “for” loop that scrapes all the href attributes (and so the URLs) for all the pages we want.
2. Clean the data and create a list containing all the URLs collected.
3. Create a new loop that goes over the list of URLs to scrape all the information needed.
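A minimal sketch of this two-loop method is shown below. To keep it runnable offline, the `PAGES` dictionary and the `fetch` function are hypothetical stand-ins for real HTTP requests, and the URLs are invented for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical site content keyed by URL, used instead of live requests.
PAGES = {
    "https://example.com/list?page=1": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/list?page=2": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/a": "<h1>Alpha</h1><p>First page.</p>",
    "https://example.com/b": "<h1>Beta</h1><p>Second page.</p>",
    "https://example.com/c": "<h1>Gamma</h1><p>Third page.</p>",
}

def fetch(url):
    """Stand-in for a real HTTP request; swap in an HTTP client of your choice."""
    return PAGES[url]

class PageParser(HTMLParser):
    """Collect both the href attributes and the visible text of a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())

def parse(html):
    parser = PageParser()
    parser.feed(html)
    parser.close()
    return parser

# Loop 1: scrape the href attributes from every listing page.
listing_urls = [f"https://example.com/list?page={n}" for n in (1, 2)]
found = []
for listing_url in listing_urls:
    for href in parse(fetch(listing_url)).hrefs:
        found.append(urljoin(listing_url, href))

# Clean: remove duplicates and sort to get the final list of URLs.
urls = sorted(set(found))

# Loop 2: visit each collected URL and extract its text.
texts = {}
for url in urls:
    texts[url] = " ".join(parse(fetch(url)).text_parts)

for url, text in texts.items():
    print(url, "->", text)
```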