Is my website crawlable

Several factors affect crawlability, including your site's structure, its internal linking, and the presence of a robots.txt file. To keep your website crawlable, make sure each of these is taken into account.

How often is my website crawled

It's a common question in the SEO community. Crawl rates and index times vary based on a number of factors, but the average crawl time can be anywhere from 3 days to 4 weeks.

What does Google use to crawl a website

Googlebot

"Crawler" (sometimes also called a "robot" or "spider") is a generic term for any program that is used to automatically discover and scan websites by following links from one web page to another. Google's main crawler is called Googlebot.
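The "following links from one web page to another" idea can be sketched in a few lines of Python. This is only an illustration, not how Googlebot is implemented: the `PAGES` dictionary stands in for a real website, and `crawl`, `LinkExtractor`, and the example.com URLs are hypothetical names chosen for the example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical in-memory "website" so the sketch runs without network access.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


def crawl(start_url, fetch):
    """Breadth-first crawl: fetch a page, extract its links, queue unseen ones."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched
            continue
        visited.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


print(crawl("https://example.com/", PAGES.get))
```

A real crawler would replace `PAGES.get` with an HTTP fetch and would also respect robots.txt and rate limits.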

What is a crawler search engine

A web crawler, spider, or search engine bot downloads and indexes content from all over the Internet. The goal of such a bot is to learn what (almost) every webpage on the web is about, so that the information can be retrieved when it's needed.

How do I stop my website from being crawled

Use robots.txt.

robots.txt is a simple text file that tells web crawlers which pages they should not access on your website. With it, you can keep compliant crawlers out of certain parts of your site. Note that blocking crawling does not guarantee a page stays out of search results: a blocked URL can still be indexed if other pages link to it.
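As a rough illustration of how a well-behaved crawler reads such a file, the sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content, the `/private/` path, and the `is_allowed` helper are made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the path is illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/private/report.html"))  # False
print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post.html"))  # True
```

The file only expresses a request. Crawlers that choose to ignore it are not stopped by it.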

What does it mean when a URL is crawled

Crawling is the process of finding new or updated pages to add to Google (as in "Google crawled my website"). One of Google's crawlers requests the page. The terms "crawl" and "index" are often used interchangeably, although they are different (but closely related) actions.

How do I get my website crawled

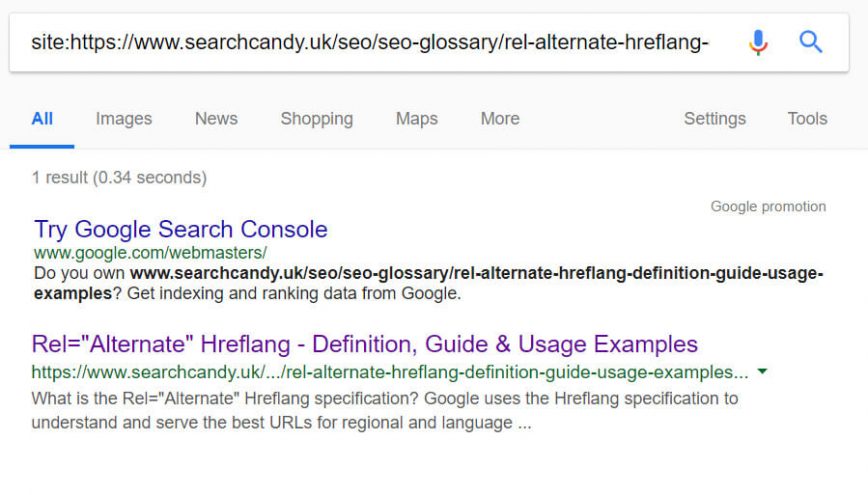

Use the URL Inspection tool (just a few URLs)

To request a crawl of individual URLs, use the URL Inspection tool. You must be an owner or full user of the Search Console property to be able to request indexing in the URL Inspection tool.

What is an example of crawling a website

Some examples of web crawlers used for search engine indexing include the following: Amazonbot is the Amazon web crawler. Bingbot is Microsoft's search engine crawler for Bing. DuckDuckBot is the crawler for the search engine DuckDuckGo.

What is an example of a web crawler

Examples of web crawlers

Amazonbot is the Amazon web crawler. Bingbot is Microsoft's search engine crawler for Bing. DuckDuckBot is the crawler for the search engine DuckDuckGo. Googlebot is the crawler for Google's search engine.
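One way to spot these crawlers in server logs is to look for their names in the `User-Agent` request header. The sketch below assumes a simple case-insensitive substring match. The `KNOWN_CRAWLERS` table and `identify_crawler` function are hypothetical helpers, and because a User-Agent string can be spoofed, a match is a hint rather than proof.

```python
from typing import Optional

# Lowercase tokens to look for in a User-Agent header, mapped to display names.
KNOWN_CRAWLERS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "duckduckbot": "DuckDuckBot",
    "amazonbot": "Amazonbot",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the crawler name if a known token appears in user_agent, else None."""
    lowered = user_agent.lower()
    for token, name in KNOWN_CRAWLERS.items():
        if token in lowered:
            return name
    return None


sample = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(identify_crawler(sample))  # Googlebot
```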

Is it legal to crawl data

Web scraping and crawling aren't illegal by themselves. After all, you could scrape or crawl your own website, without a hitch. Startups love it because it's a cheap and powerful way to gather data without the need for partnerships.

How do I block Google crawler

Use a robots.txt file to manage crawl traffic, and also to prevent image, video, and audio files from appearing in Google search results. This won't prevent other pages or users from linking to your image, video, or audio file.

How do I stop Google from crawling my URL

noindex is a rule, set with either a <meta> tag or an HTTP response header, that prevents search engines that support it, such as Google, from indexing the content. It does not stop crawling: the crawler has to fetch the page in order to see the rule.
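To check whether a page actually carries the rule, you could inspect both places it can appear. The sketch below assumes the usual forms, a `<meta name="robots" content="noindex">` tag and an `X-Robots-Tag: noindex` response header. The `has_noindex` helper and `RobotsMetaParser` class are hypothetical names for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html, headers=None):
    """Return True if the HTML or the response headers carry a noindex rule.

    headers is a plain dict here, so the lookup is case-sensitive;
    a real HTTP client may normalise header names differently.
    """
    header_value = (headers or {}).get("X-Robots-Tag", "")
    header_directives = {d.strip().lower() for d in header_value.split(",")}
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in header_directives or "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))  # True
```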

Is it illegal to crawl a website

Web scraping is generally considered legal when you scrape data that is publicly available on the internet. But some kinds of data are protected by international regulations, so be careful when scraping personal data, intellectual property, or confidential data.

Is Yahoo a web crawler

Search engines like Google, Bing, and Yahoo use crawlers to properly index downloaded pages so that users can find them faster and more efficiently when searching. Without web crawlers, there would be nothing to tell them that your website has new and fresh content.

What is the difference between a web crawler and a web scraper

Web scraping aims to extract data from web pages, whereas web crawling aims to find and index web pages. Web crawling involves continuously following hyperlinks from one page to the next. In comparison, web scraping involves writing a program that collects specific data from one or more websites.

How do I limit web crawling

By using robots.txt, you can ask search engines and other web crawlers to stay out of certain parts of your site. It's important to note that robots.txt does not provide any type of security: it is a publicly readable file that only well-behaved crawlers honor, so sensitive or confidential information needs real access controls, such as authentication.

Is web crawling legal vs web scraping

Web scraping and crawling aren't illegal by themselves. After all, you could scrape or crawl your own website, without a hitch. Startups love it because it's a cheap and powerful way to gather data without the need for partnerships.

How do I get rid of web crawlers

Here are some ways to stop bots from crawling your website:

- Use robots.txt. The robots.txt file is a simple way to tell search engines and other bots which pages on your site should not be crawled.
- Implement CAPTCHAs.
- Use HTTP authentication.
- Block IP addresses.
- Use referrer spam blockers.
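As one illustration of the IP-blocking option, the sketch below checks a client address against a blocklist using Python's standard `ipaddress` module. The `BLOCKED_NETWORKS` list and `is_blocked` helper are hypothetical, and the ranges are reserved documentation addresses rather than real bots. In practice this check usually lives in a firewall or web server configuration rather than in application code.

```python
import ipaddress

# Hypothetical blocklist built from reserved documentation ranges.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # a whole range
    ipaddress.ip_network("198.51.100.7/32"),  # a single address
]


def is_blocked(client_ip, networks=BLOCKED_NETWORKS):
    """Return True if client_ip falls inside any blocked network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in networks)


print(is_blocked("203.0.113.42"))  # True
print(is_blocked("192.0.2.1"))     # False
```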

How do I stop pages from being crawled

You can prevent new content from appearing in results by adding the URL slug to a robots.txt file. Search engines use these files to understand which parts of a website they may crawl. If search engines have already indexed your content, you can add a "noindex" meta tag to the head section of the content's HTML.

Does Google automatically crawl

Like all search engines, Google uses an algorithmic crawling process to determine which sites to crawl, how often, and how many pages to fetch from each site. Google doesn't necessarily crawl all the pages it discovers. One reason is that the page is blocked from crawling by robots.txt.

Can websites detect web scraping

Yes. An anti-bot system with fingerprinting enabled uses browser attributes to help detect web scraping. When it is configured to alarm on and block suspicious clients, it collects browser attributes and blocks suspicious requests using the information obtained by fingerprinting.

Does Facebook crawl websites

The Facebook Crawler crawls the HTML of an app or website that was shared on Facebook via copying and pasting the link or by a Facebook social plugin. The crawler gathers, caches, and displays information about the app or website such as its title, description, and thumbnail image.
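The title, description, and thumbnail are typically read from Open Graph `<meta>` tags in the page's HTML. The text above does not name them, so treat that as an assumption of this sketch. The `link_preview` function and `OpenGraphParser` class are hypothetical and only approximate what a link-preview crawler might extract.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..." content="..."> values."""

    def __init__(self):
        super().__init__()
        self.properties = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        prop = attr.get("property") or ""
        content = attr.get("content")
        if prop.startswith("og:") and content is not None:
            # Keep the first value seen for each property.
            self.properties.setdefault(prop, content)


def link_preview(html):
    """Return the title, description, and image a preview card could show."""
    parser = OpenGraphParser()
    parser.feed(html)
    return {
        "title": parser.properties.get("og:title"),
        "description": parser.properties.get("og:description"),
        "image": parser.properties.get("og:image"),
    }


page = """
<head>
  <meta property="og:title" content="Example article">
  <meta property="og:description" content="A short summary.">
  <meta property="og:image" content="https://example.com/thumb.png">
</head>
"""
print(link_preview(page))
```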

What is Google's web crawler called

Google operates a web crawler named Googlebot, which searches for new websites, crawls them, and saves them in Google's massive search index.

Is Google a web crawler or web scraper

Google Search is a fully automated search engine that uses software known as web crawlers, which explore the web regularly to find pages to add to Google's index.
