What is a crawler coding

A web crawler, also called a crawler or web spider, is a computer program that searches and automatically indexes website content and other information on the internet. These programs, or bots, are most commonly used to create entries for a search engine index.

How to write a crawler in Java

A basic web crawler in Java follows these steps:
1. Pick a URL from the frontier.
2. Fetch the HTML code of that URL.
3. Parse the HTML code to get the links to other URLs.
4. Check whether each URL has already been crawled.
5. For each extracted URL, verify that the site agrees to be crawled (robots.txt).
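The "already crawled" check and the robots check from the steps above can be sketched as follows. This is a minimal sketch written in Python rather than Java for brevity. The `ROBOTS_TXT` content, the `example-crawler` user agent, and the helper names `build_robot_rules` and `should_crawl` are assumptions made for illustration, not part of any particular crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real crawler would download this file
# from the target host before fetching any page on that host.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_robot_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set that can be queried per URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def should_crawl(url, visited, rules, user_agent="example-crawler"):
    """Return True only if the URL is new and robots.txt allows fetching it."""
    if url in visited:
        return False  # already crawled, skip it
    return rules.can_fetch(user_agent, url)


rules = build_robot_rules(ROBOTS_TXT)
visited = {"https://example.com/"}  # URLs the crawler has already handled

for candidate in ("https://example.com/about",
                  "https://example.com/private/data",
                  "https://example.com/"):
    print(candidate, should_crawl(candidate, visited, rules))
```

Keeping `visited` as a set makes the duplicate check cheap even when the frontier grows large.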

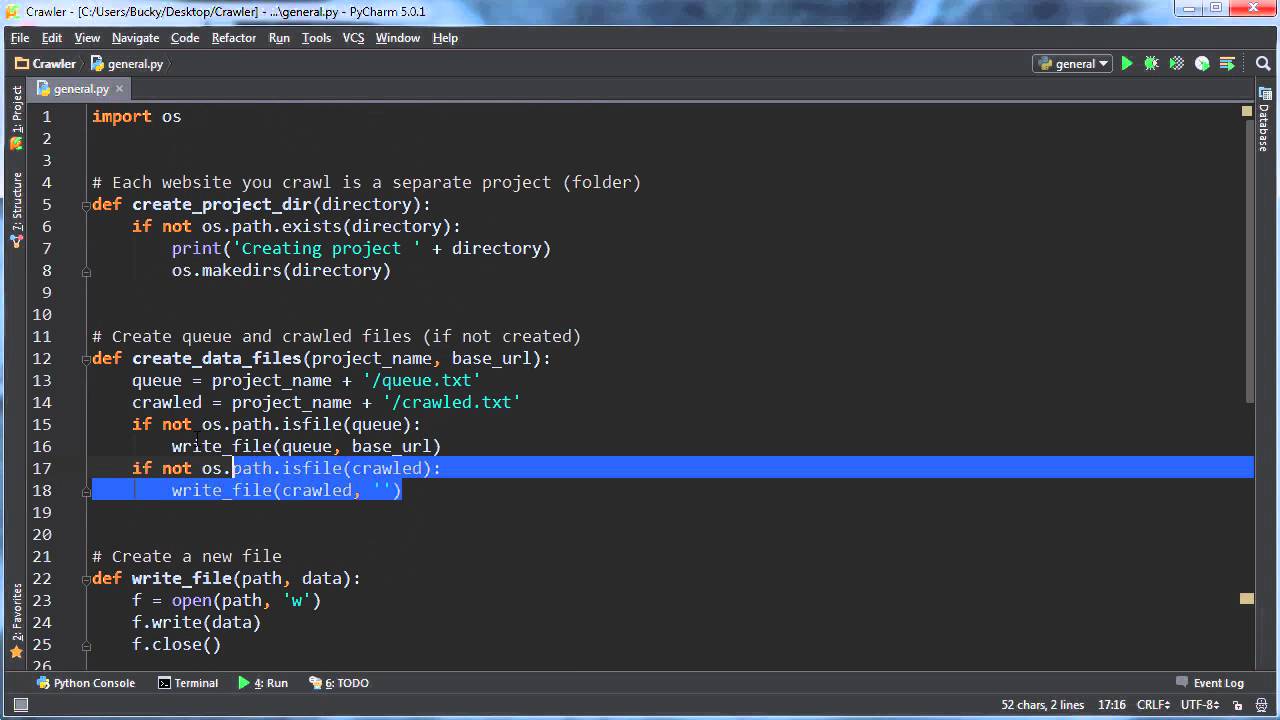

How to make a crawler in Python

A web crawler (spider) class in Python, for example in Scrapy, typically defines:
- a name for identifying the spider or crawler, such as “Wikipedia”.
- a start_urls variable containing a list of URLs to begin crawling from.
- a parse() method, which processes each webpage to extract the relevant and necessary content.

Can data crawling be manual

Web crawling can be done manually by going through all of the links on multiple websites and taking notes about which pages contain information relevant to your search. It's more common to use an automated tool to do this though.

Which programming language is best for crawler

Python

Python is widely regarded as the best language for web scraping. It is an all-rounder and can handle most web crawling-related processes smoothly.

What language is Google crawler written in

The purpose of Google's crawlers is to index pages so that they can appear in the search engine results. The crawler is written in C++ and uses internal libraries to make it efficient.

How to make a grid in Java code

So let's just pass in one, and then we can add this button to the frame: frame.add(button1). There's a shortcut for this that we learned in the last video on flow layout managers.

How to crawl in Java

And it will automatically put you on the floor so you can crawl. I'm not sure if this works on any other version of Minecraft.

How do you write crawlers

Here are the basic steps to build a crawler:
Step 1: Add one or several URLs to be visited.
Step 2: Pop a link from the URLs to be visited and add it to the visited URLs list.
Step 3: Fetch the page's content and scrape the data you're interested in with the ScrapingBot API.

How to create a bot in Python

The tutorial “ChatterBot: Build a Chatbot With Python” starts with a demo, a project overview, and prerequisites, then walks through these steps:
Step 1: Create a chatbot using Python ChatterBot.
Step 2: Begin training your chatbot.
Step 3: Export a WhatsApp chat.
Step 4: Clean your chat export.
Step 5: Train your chatbot on custom data and start chatting.

Is data crawling ethical

Crawlers can raise legal concerns because they make copies of copyrighted material without the owner's permission. Copyright infringement is one of the most important legal issues that search engines need to address.

Is scraping the same as crawling

The short answer is that web scraping is about extracting data from one or more websites, while crawling is about finding or discovering URLs or links on the web.
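The difference can be illustrated on a single page: collecting the links is the crawling part, and pulling out a specific piece of data (here the page title) is the scraping part. This is a minimal sketch using only the standard library. The `SAMPLE_HTML` page and the `LinkAndTitleParser` class are made up for illustration.

```python
from html.parser import HTMLParser


class LinkAndTitleParser(HTMLParser):
    """Collects link targets (crawling) and the page title (scraping)."""

    def __init__(self):
        super().__init__()
        self.links = []        # URLs discovered on the page
        self.title = ""        # data extracted from the page
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Title text may arrive in several chunks, so concatenate it.
        if self._in_title:
            self.title += data


# Hypothetical page content used instead of a real HTTP request.
SAMPLE_HTML = """
<html><head><title>Example page</title></head>
<body><a href="/about">About</a> <a href="/contact">Contact</a></body></html>
"""

page = LinkAndTitleParser()
page.feed(SAMPLE_HTML)
print("discovered links:", page.links)
print("scraped title:", page.title)
```

In practice a crawler feeds the discovered links back into its queue, while a scraper stores the extracted data.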

Can Python be used for web crawler

Web crawling is a powerful technique to collect data from the web by finding all the URLs for one or multiple domains. Python has several popular web crawling libraries and frameworks.

Is C++ good for web scraping

C++ is a statically typed programming language that is widely used for developing high-performance applications because of its speed, efficiency, and memory management capabilities. It is a versatile language that comes in handy in a wide range of applications, including web scraping.

What is the best programming language for crawling

Python

Scraping and crawling are I/O-bound tasks, as the crawler spends a lot of time waiting for a response from the crawled website. Python is very well suited to handle these tasks as it supports both multithreading and asynchronous programming patterns.
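The benefit of asynchronous programming for I/O-bound work can be sketched with `asyncio`. In this minimal sketch the network wait is simulated with `asyncio.sleep`. The `fake_fetch` and `fetch_all` functions and the example URLs are assumptions for illustration, not a real HTTP client.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Simulate a slow network request; a real crawler would do HTTP here."""
    await asyncio.sleep(delay)  # stands in for waiting on the server
    return f"<html>content of {url}</html>"


async def fetch_all(urls):
    """Start all fetches at once and wait until every one has finished."""
    return await asyncio.gather(*(fake_fetch(u) for u in urls))


urls = [f"https://example.com/page{i}" for i in range(5)]

start = time.perf_counter()
pages = asyncio.run(fetch_all(urls))
elapsed = time.perf_counter() - start

# The waits overlap, so the total is close to one delay, not five.
print(f"fetched {len(pages)} pages in {elapsed:.2f}s")
```

Because the five simulated requests wait at the same time, the total runtime stays near a single delay instead of the sum of all delays.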

How to make a 2d grid in Java

To create a two-dimensional array in Java, you have to specify the data type of the items to be stored in the array, followed by two pairs of square brackets and the name of the array. Here's what the syntax looks like: data_type[][] array_name; For example, int[][] grid = new int[3][4]; declares a grid with 3 rows and 4 columns.

How to make a grid in Python

To create a grid in Tkinter, you need to use the grid() method. It allows you to indicate the row and column positioning in its parameter list. Both row and column start from index 0. For example, grid(row=1, column=2) specifies a position in the second row and third column of your frame or window.

How do you build a crawl

Here are three steps to building a crawl space:
1. Trenches are dug below the frost line.
2. Concrete is poured into the trenches and walls are constructed of concrete, either cast-in-place concrete, insulated concrete, or concrete masonry units.
3. Short footings and block walls are laid to support the weight of the house.

How do you crawl data in Python

1. Put the starting URLs into a queue.
2. Loop through the queue, reading the URLs one by one. For each URL, crawl the corresponding web page, then repeat the crawling process for the links it contains.
3. Check whether the stop condition is met. If no stop condition is set, the crawler will keep crawling until it cannot get a new URL.
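The queue-based process above can be sketched in a few lines. This is a minimal sketch, not a production crawler: the `crawl` function, its `max_pages` stop condition, and the in-memory `FAKE_WEB` dictionary (used in place of real HTTP requests) are all assumptions for illustration.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl; `fetch` maps a URL to HTML text or None."""
    queue = deque(seed_urls)   # URLs waiting to be crawled
    seen = set(seed_urls)      # URLs already queued, to avoid duplicates
    visited = []               # URLs crawled so far, in order
    # Stop condition: the queue is empty or the page limit is reached.
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # page could not be fetched, skip it
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical in-memory "web" so the sketch runs without network access.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/b": '<a href="/">home</a>',
    "https://example.com/c": "",
}

crawled = crawl(["https://example.com/"], FAKE_WEB.get)
print(crawled)
```

Passing `fetch` as a parameter keeps the loop independent of how pages are downloaded, so the same function can be pointed at a real HTTP client later.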

How do I code my own bot

How to make your own Discord bot:
1. Turn on “Developer mode” in your Discord account.
2. Click on “Discord API”.
3. In the Developer portal, click on “Applications”.
4. Name the bot and then click “Create”.
5. Go to the “Bot” menu and generate a token using “Add Bot”.
6. Program your bot using the bot token and save the file.

Is Python good for making bots

Python has many benefits that other coding languages don't have, and these make it ideal for programming chatbots. Python's built-in workflow allows for troubleshooting programs while developing your bot code.

Is it legal to scrape emails

Check the applicable rules and regulations: scraping publicly available data on the web is legal, but you must consider data security and user privacy.

Is it legal to scrape Twitter

It is legal and allowed to scrape publicly available data from Twitter, meaning anything that you can see without logging into the website.