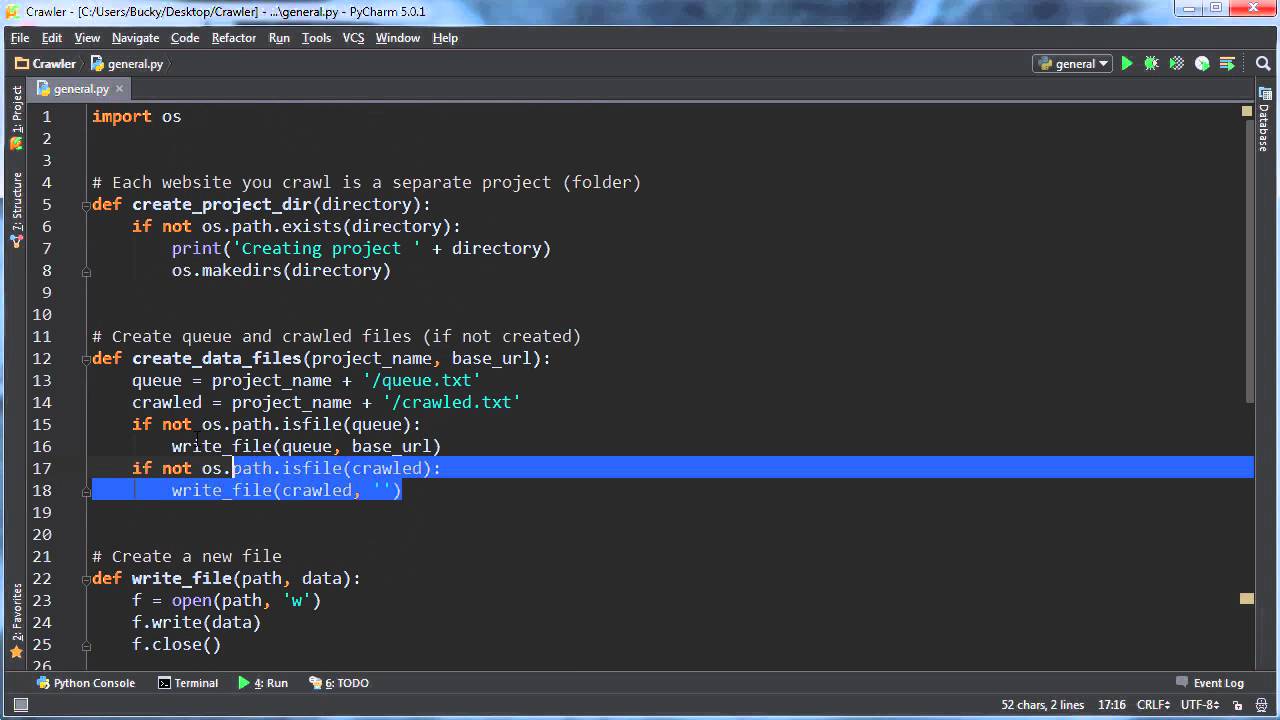

How to implement a web crawler in Python

Building a web crawler in Python with a framework such as Scrapy comes down to defining a spider class with three parts:

- a name that identifies the spider or crawler, for example "Wikipedia";
- a start_urls variable containing a list of URLs to begin crawling from;
- a parse() method that processes each downloaded webpage and extracts the relevant content.

What is the use of a web crawler

A web crawler, spider, or search engine bot downloads and indexes content from all over the Internet. The goal of such a bot is to learn what (almost) every webpage on the web is about, so that the information can be retrieved when it's needed.
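To make "indexing" concrete, the sketch below builds a tiny inverted index, a mapping from each word to the pages that contain it, and then answers a query from it. The function names `build_index` and `search` and the sample pages are hypothetical; real search engines use far more elaborate structures.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical downloaded pages: URL -> extracted text.
pages = {
    "https://example.com/a": "Python web crawler tutorial",
    "https://example.com/b": "Cooking with Python the snake",
}
index = build_index(pages)
```

Calling `search(index, "web crawler")` on this sample returns only the first URL, because it is the only page containing both words.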

What is a crawler in Python

Search engines discover pages using a bot called a web crawler. It surfs the internet, collects relevant links, and stores them. This is useful both for search engines and for web scraping. You can write such a crawler yourself; all you need is a basic knowledge of the Python programming language.

How to use Scrapy in Python

Use Scrapy for web scraping in Python:

1. An introduction to Scrapy and an overview of the course content.
2. Setting up a virtual environment and installing Scrapy.
3. Creating a new Scrapy project.
4. Building your first Scrapy spider to crawl and extract data.

Can Python be used for a web crawler

Web crawling is a powerful technique to collect data from the web by finding all the URLs for one or multiple domains. Python has several popular web crawling libraries and frameworks.

How to create a web GUI in Python

Tkinter programming involves four steps:

1. Import the Tkinter module.
2. Create the GUI application's main window.
3. Add one or more widgets to the GUI application.
4. Enter the main event loop to respond to each event triggered by the user.

Is web crawling illegal

Web scraping and crawling aren't illegal by themselves. After all, you could scrape or crawl your own website, without a hitch. Startups love it because it's a cheap and powerful way to gather data without the need for partnerships.

How do I crawl a website URL

The six steps to crawling a website are:

1. Understanding the domain structure.
2. Configuring the URL sources.
3. Running a test crawl.
4. Adding crawl restrictions.
5. Testing your changes.
6. Running your crawl.
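The "adding crawl restrictions" step can be sketched as a single predicate that decides whether a URL may be visited. The function name `is_allowed`, its parameters, and the particular restrictions (target domain, excluded path prefixes, maximum depth) are assumptions chosen for illustration; the original text does not say which restrictions to apply.

```python
from urllib.parse import urlparse


def is_allowed(url, allowed_domain, excluded_prefixes=(), depth=0, max_depth=3):
    """Return True if the crawler may visit `url` under the restrictions."""
    parts = urlparse(url)
    if parts.scheme not in ("http", "https"):
        return False  # skip mailto:, javascript:, and similar links
    host = parts.hostname or ""
    if host != allowed_domain and not host.endswith("." + allowed_domain):
        return False  # stay on the target domain and its subdomains
    if any(parts.path.startswith(prefix) for prefix in excluded_prefixes):
        return False  # skip sections that were explicitly excluded
    return depth <= max_depth  # limit how deep the crawl goes
```

A test crawl can then filter every discovered link through `is_allowed` before adding it to the queue, which makes it easy to tighten or relax the rules between runs.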

How do I crawl multiple websites in Python

The method goes as follows:

1. Create a "for" loop that scrapes all the href attributes (and so the URLs) for all the pages we want.
2. Clean the data and create a list containing all the URLs collected.
3. Create a new loop that goes over the list of URLs to scrape all the information needed.
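The cleaning step in the middle of that method could look roughly like the sketch below, which uses only the standard library. The helper name `clean_urls` is hypothetical, and the cleaning rules (resolve relative links, drop fragments, keep only http/https, remove duplicates) are one reasonable interpretation of "clean the data".

```python
from urllib.parse import urldefrag, urljoin, urlparse


def clean_urls(base_url, hrefs):
    """Resolve, filter, and de-duplicate raw href values, keeping order."""
    seen = set()
    cleaned = []
    for href in hrefs:
        if not href:
            continue  # ignore empty href attributes
        absolute = urljoin(base_url, href.strip())
        absolute, _fragment = urldefrag(absolute)  # drop "#section" parts
        if urlparse(absolute).scheme not in ("http", "https"):
            continue  # ignore mailto:, javascript:, and similar links
        if absolute not in seen:
            seen.add(absolute)
            cleaned.append(absolute)
    return cleaned
```

The resulting list can be fed directly into the second loop, which visits each URL once and scrapes the information needed.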

Is Scrapy better than BeautifulSoup

Generally, we recommend sticking with BeautifulSoup for smaller or domain-specific scrapers and using Scrapy for medium to big web scraping projects that need more speed and control over the whole scraping process.

Is Scrapy faster than Selenium

Scrapy, which is written in Python, is the fastest of the three because it is asynchronous and built specifically for web scraping. Beautiful Soup and Selenium, by contrast, are inefficient when scraping large amounts of data.

Is Python good for web scraping

Python is an excellent choice for building web scrapers because its ecosystem includes libraries designed specifically for web scraping. It is also easy to understand: reading Python code is similar to reading an English statement, which makes the syntax simple to learn.

Which language is best for a web crawler

Python is widely regarded as the best language for web scraping. It is an all-rounder that handles most web crawling tasks smoothly. Beautiful Soup, one of the most widely used Python libraries, is a large part of what makes scraping in this language so easy.

Can I make a GUI using Python

Python offers multiple options for developing a GUI (graphical user interface). Of these, tkinter is the most commonly used. It is the standard Python interface to the Tk GUI toolkit and ships with Python, which makes it the fastest and easiest way to create GUI applications.

Is Python good for GUI

For someone who'd like to design a user interface of their own, Python is an easy-to-use programming language that users at almost any level can master.

Can you get IP banned for web scraping

Having your IP addresses banned as a web scraper is a pain. When a website blocks your IPs, you can no longer collect data from it, so anyone who wants to collect web data at any kind of scale needs to understand how to bypass IP bans.

Is scraping TikTok legal

Scraping publicly available data on the web, including TikTok, is legal as long as it complies with applicable laws and regulations, such as data protection and privacy laws.

Is it legal to crawl a website

Web scraping is generally legal if you scrape data that is publicly available on the internet. Some kinds of data are protected by international regulations, however, so be careful when scraping personal data, intellectual property, or confidential data.

How do I crawl all pages of a website in Python

1. Import the necessary modules: `import requests`, `from bs4 import BeautifulSoup`, `from tqdm import tqdm`, and `import json`.
2. Write a function for getting the text data from a website URL.
3. Write a function for getting all links from one page and storing them in a list.
4. Write a function that loops over all the subpages.
5. Create the loop.
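The steps above can be sketched as follows. To keep the example free of third-party dependencies, it swaps in the standard library (`urllib` and `html.parser`) for `requests` and BeautifulSoup, and it leaves out the `tqdm` progress bar and JSON export. The names `LinkParser`, `fetch`, `get_links`, and `crawl`, along with the `max_pages` limit, are assumptions made for this sketch.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collect the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def fetch(url):
    """Download a page and return its HTML as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def get_links(page_url, html):
    """Return absolute, fragment-free links found on one page."""
    parser = LinkParser()
    parser.feed(html)
    return [urldefrag(urljoin(page_url, href)).url for href in parser.hrefs]


def crawl(start_url, fetch_page=fetch, max_pages=50):
    """Breadth-first crawl of pages on the same host; returns {url: html}."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        try:
            html = fetch_page(url)
        except (OSError, ValueError):
            continue  # skip pages that fail to load
        pages[url] = html
        for link in get_links(url, html):
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

Calling `crawl("https://example.com/")` would fetch real pages over the network; passing a different `fetch_page` function makes it possible to try the loop against canned HTML first.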

How do I crawl a website without being blocked in Python

Here are a few quick tips on how to crawl a website without getting blocked:

- Rotate IP addresses.
- Set a real User-Agent.
- Set other request headers.
- Set random intervals between your requests.
- Set a referrer.
- Use a headless browser.
- Avoid honeypot traps.
- Detect website changes.
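Three of these tips (a real User-Agent, extra request headers including a referrer, and random intervals) can be sketched with the standard library alone. The header values and the helper names `build_request`, `random_delay`, and `polite_pause` are illustrative assumptions; IP rotation and headless browsers need additional tooling and are not shown.

```python
import random
import time
from urllib.request import Request

# Assumed example header values; replace them with ones suited to your crawler.
DEFAULT_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
}


def build_request(url, headers=None):
    """Create a request carrying browser-like headers."""
    merged = dict(DEFAULT_HEADERS)
    merged.update(headers or {})
    return Request(url, headers=merged)


def random_delay(min_seconds=1.0, max_seconds=3.0):
    """Pick a random waiting time within the given bounds."""
    return random.uniform(min_seconds, max_seconds)


def polite_pause(min_seconds=1.0, max_seconds=3.0):
    """Sleep for a random interval between two requests."""
    time.sleep(random_delay(min_seconds, max_seconds))
```

In a crawl loop, each page would be fetched with `urllib.request.urlopen(build_request(url))`, followed by a call to `polite_pause()` before the next request.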

Can Scrapy replace Selenium

To scrape data from a website that relies on JavaScript, Selenium is the better approach. However, you can also use Scrapy to scrape JavaScript-based websites through Splash, a JavaScript rendering service that integrates with Scrapy via the scrapy-splash plugin.

Is BeautifulSoup a web crawler

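In Python, BeautifulSoup and Scrapy are the libraries most commonly used for web scraping. BeautifulSoup only parses HTML that has already been downloaded; it does not fetch pages or follow links by itself, so it is a parser rather than a crawler.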

Do hackers use web scraping

A scraping bot can gather user data from social media sites. Then, by scraping sites that contain addresses and other personal information and correlating the results, a hacker could engage in identity crimes like submitting fraudulent credit card applications.