What is crawling in SEO

In the SEO world, crawling means “following your links”, and indexing is the process of “adding webpages into Google search”. Crawling comes first: Google crawls web pages and then indexes them.

What is crawling and indexing in SEO

Crawling is the discovery of pages and links that lead to more pages. Indexing is storing, analyzing, and organizing the content and connections between pages. There are parts of indexing that help inform how a search engine crawls.

How does Google crawler work in SEO

Crawling: Google downloads text, images, and videos from pages it found on the internet with automated programs called crawlers. Indexing: Google analyzes the text, images, and video files on the page, and stores the information in the Google index, which is a large database.

What are spiders and crawlers in SEO

A web crawler, spider, or search engine bot downloads and indexes content from all over the Internet. The goal of such a bot is to learn what (almost) every webpage on the web is about, so that the information can be retrieved when it's needed.

What is an example of crawling a website

Some examples of web crawlers used for search engine indexing include the following: Amazonbot is the Amazon web crawler. Bingbot is Microsoft's search engine crawler for Bing. DuckDuckBot is the crawler for the search engine DuckDuckGo.

What is SEO crawl efficiency

Crawl efficiency is how seamlessly bots are able to crawl all the pages on your site. A clean site structure, reliable servers, error-free sitemaps and robots.txt files, and optimized site speed all improve crawl efficiency.

What is crawling vs indexing vs ranking

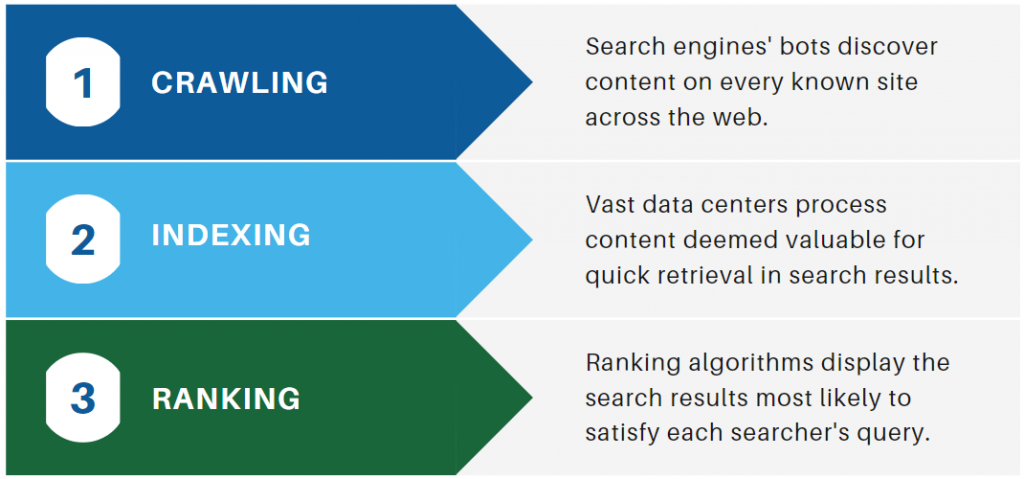

Indexing – Once a page is crawled, search engines add it to their database. For Google, crawled pages are added to the Google Index. Ranking – After indexing, search engines rank pages based on various factors. Google is often said to weigh pages against more than 200 ranking factors before ranking them.

What is the difference between crawling indexing and ranking

In a nutshell, this process involves the following steps: Crawling – Following links to discover the most important pages on the web. Indexing – Storing information about all the retrieved pages for later retrieval. Ranking – Determining what each page is about, and how it should rank for relevant queries.
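The three stages can be sketched as a toy pipeline. This is only an illustration under strong assumptions: the `PAGES` dictionary stands in for the web, the index is a simple word-count table, and ranking is by raw term frequency, which is far simpler than anything a real search engine does. All names here are hypothetical.

```python
from collections import defaultdict

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
PAGES = {
    "/": ("welcome to our seo guide", ["/crawling", "/indexing"]),
    "/crawling": ("crawling follows links to discover pages", ["/indexing"]),
    "/indexing": ("indexing stores pages; crawling feeds indexing", []),
}

def crawl(start):
    """Crawling: follow links from start, returning URLs in visit order."""
    seen, queue = [start], [start]
    while queue:
        url = queue.pop(0)
        for link in PAGES[url][1]:
            if link not in seen:
                seen.append(link)
                queue.append(link)
    return seen

def build_index(urls):
    """Indexing: map each word to {url: count}, a tiny inverted index."""
    index = defaultdict(dict)
    for url in urls:
        for word in PAGES[url][0].replace(";", " ").split():
            index[word][url] = index[word].get(url, 0) + 1
    return index

def rank(index, query):
    """Ranking: order matching URLs by how often the query word appears."""
    hits = index.get(query, {})
    return sorted(hits, key=lambda u: (-hits[u], u))

index = build_index(crawl("/"))
print(rank(index, "indexing"))
```

A page that was never crawled never reaches `build_index`, so it cannot be ranked at all, which is the ordering the steps above describe.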

How does the crawler work

While on a webpage, the crawler stores the copy and descriptive data called meta tags, and then indexes it for the search engine to scan for keywords. This process then decides if the page will show up in search results for a query, and if so, returns a list of indexed webpages in order of importance.

How often does Google crawl for SEO

It's a common question in the SEO community. Crawl rates and index times vary with a number of factors, but the average crawl time can be anywhere from 3 days to 4 weeks.

What is the difference between crawler and bot

A web crawler, also called a crawler or web spider, is a computer program that's used to search and automatically index website content and other information over the internet. These programs, or bots, are most commonly used to create entries for a search engine index.

How does a crawler work

A web crawler works by discovering URLs and reviewing and categorizing web pages. Along the way, it finds hyperlinks to other webpages and adds them to the list of pages to crawl next. Web crawlers can also estimate the importance of each web page when deciding what to crawl.
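The "list of pages to crawl next" is often called a frontier. Below is a minimal sketch of that loop, assuming a breadth-first order and a caller-supplied `fetch_links` function. The `FAKE_SITE` dictionary and `fake_fetch_links` are hypothetical stand-ins for real HTTP requests, and the sketch does no importance scoring.

```python
from collections import deque
from urllib.parse import urljoin, urldefrag

def crawl(seed, fetch_links, max_pages=100):
    """Breadth-first crawl: visit the seed, then queue every new link found."""
    frontier = deque([seed])      # URLs waiting to be crawled
    seen = {seed}                 # URLs already queued, to avoid loops
    order = []                    # URLs in the order they were crawled
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        for href in fetch_links(url):
            # Resolve relative links against the current page and drop #fragments.
            link, _ = urldefrag(urljoin(url, href))
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order

# Hypothetical site used in place of real HTTP requests.
FAKE_SITE = {
    "https://example.com/": ["/about", "/blog/"],
    "https://example.com/about": ["/", "/blog/#top"],
    "https://example.com/blog/": ["post-1", "/about"],
    "https://example.com/blog/post-1": [],
}

def fake_fetch_links(url):
    """Return the links 'found' on a page of the fake site."""
    return FAKE_SITE.get(url, [])

print(crawl("https://example.com/", fake_fetch_links))
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, and `max_pages` is a crude stand-in for a crawl budget.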

How do you know if a website can be crawled

If the URL is not within a Search Console property that you own:

1. Open the Rich Results test.
2. Enter the URL of the page or image to test and click Test URL.
3. In the results, expand the "Crawl" section.

You should see the following result: Crawl allowed – should be "Yes".
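Outside Google's tools, one rough local check is to test a URL against the site's robots.txt rules. The sketch below uses Python's standard `urllib.robotparser`. The `ROBOTS_TXT` content, the helper name `is_crawl_allowed`, and the example URLs are assumptions for illustration, and this only covers robots.txt, not other reasons a page might be uncrawlable.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def is_crawl_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_crawl_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/"))
print(is_crawl_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/private/report"))
```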

What is web scrape vs crawl

The short answer is that web scraping is about extracting data from one or more websites, while crawling is about finding or discovering URLs or links on the web. Usually, in web data extraction projects, you need to combine crawling and scraping.
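The difference can be seen on a single page. In this minimal sketch, collecting the `href` values is the crawling part (finding where to go next) and reading the `<title>` text is the scraping part (extracting data). The `PageParser` class and the sample `HTML` string are hypothetical, and the parser is Python's standard `html.parser`.

```python
from html.parser import HTMLParser

class PageParser(HTMLParser):
    """Collects links (crawling) and the <title> text (scraping)."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)   # a URL to crawl later
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data            # the data being scraped

# Hypothetical page content.
HTML = """<html><head><title>Blue Widget</title></head>
<body><a href="/widgets/red">Red</a> <a href="/widgets/green">Green</a></body></html>"""

parser = PageParser()
parser.feed(HTML)
print("scraped title:", parser.title)
print("links to crawl:", parser.links)
```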

What is a good crawl depth

For the most important content on your website, it's best practice to have a crawl depth of 3 or less. Similarly, it's beneficial for your most important pages to be present in your sitemap, as this makes it easier for crawlers to locate your content.
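Crawl depth can be measured on an internal link graph. The sketch below assumes depth means the minimum number of link hops from the home page, computed with a breadth-first search. The `LINKS` map and the function name `crawl_depths` are hypothetical.

```python
from collections import deque

def crawl_depths(links, home):
    """Return {page: minimum number of link hops from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:          # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/category", "/about"],
    "/category": ["/category/widgets"],
    "/category/widgets": ["/category/widgets/blue"],
    "/category/widgets/blue": ["/category/widgets/blue/specs"],
}

depths = crawl_depths(LINKS, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print(too_deep)
```

Pages that land in `too_deep` are candidates for an extra internal link from a shallower page.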

How do I optimize my website for crawling

How To Improve Crawling And Indexing

- Improve Page Loading Speed.
- Strengthen Internal Link Structure.
- Submit Your Sitemap To Google.
- Update Robots.
- Check Your Canonicalization.
- Perform A Site Audit.
- Check For Low-Quality Or Duplicate Content.
- Eliminate Redirect Chains And Internal Redirects.
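As one illustration of the redirect-chain item, the sketch below walks a redirect map and reports every source URL that needs more than one hop to reach its final destination. The `REDIRECTS` map and both function names are hypothetical. In practice the map would come from a site audit or server configuration.

```python
def resolve_redirects(redirects, url, max_hops=10):
    """Follow url through the redirect map; return (final_url, hops)."""
    hops = 0
    seen = {url}
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        seen.add(url)
    return url, hops

def find_chains(redirects):
    """Return {source: final_url} for sources that take more than one hop."""
    chains = {}
    for source in redirects:
        final, hops = resolve_redirects(redirects, source)
        if hops > 1:
            chains[source] = final
    return chains

# Hypothetical redirect map: old URL -> where it redirects.
REDIRECTS = {
    "/old": "/older-new",
    "/older-new": "/new",
    "/promo": "/new",
}

print(find_chains(REDIRECTS))
```

Each entry in the result can be fixed by pointing the source straight at the final URL.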

Is crawling a ranking factor

The ranking stage includes most of the analysis performed by Google's algorithms. To be considered a ranking factor, something needs to be given weight during the ranking stage. Crawling is required before a page can rank, but once that prerequisite is met, it is not weighted during ranking.

What happens first crawling or indexing

Crawling is the very first step in the process. It is followed by indexing, ranking (pages going through various ranking algorithms) and finally, serving the search results.

What is an example of a crawler

All search engines need crawlers. Some examples: Amazonbot is Amazon's web crawler for web content identification and backlink discovery, Baiduspider is the crawler for Baidu, and Bingbot is the crawler for Microsoft's Bing search engine.

Why do we need crawler

In AWS Glue, which uses the word in a different sense from search engine crawlers, crawlers let you quickly and easily scan your data sources, such as Amazon S3 buckets or relational databases, to create metadata tables that capture the schema and statistics of your data.

How do I know if Google is crawling my website

For a definitive test of whether your URL is appearing, search for the page URL on Google. The "Last crawl" date in the Page availability section shows the date when the page used to generate this information was crawled.

Does Google automatically crawl

Like all search engines, Google uses an algorithmic crawling process to determine which sites, how often, and what number of pages from each site to crawl. Google doesn't necessarily crawl all the pages it discovers, and the reasons why include the following: The page is blocked from crawling (robots.

What is the advantage of crawler

The main advantage of a crawler crane is that it can move around a site and perform lifts with very little set-up, because the crane is stable on its tracks without outriggers. In addition, a crawler crane is capable of traveling with a load.

What is an example of crawler

Examples of web crawlers

Amazonbot is the Amazon web crawler. Bingbot is Microsoft's search engine crawler for Bing. DuckDuckBot is the crawler for the search engine DuckDuckGo. Googlebot is the crawler for Google's search engine.
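A common way to spot these crawlers in server logs is to look for their name in the User-Agent header. The sketch below assumes simple case-insensitive substring matching. The `KNOWN_CRAWLERS` table and the function name `identify_crawler` are hypothetical, and because a User-Agent string can be spoofed, a match is only a hint and not proof of identity.

```python
# Lowercase token to look for -> crawler name from the list above.
KNOWN_CRAWLERS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "duckduckbot": "DuckDuckBot",
    "amazonbot": "Amazonbot",
}

def identify_crawler(user_agent):
    """Return the crawler name found in a User-Agent string, or None."""
    ua = user_agent.lower()
    for token, name in KNOWN_CRAWLERS.items():
        if token in ua:
            return name
    return None

print(identify_crawler(
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
))
print(identify_crawler("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```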

How do you crawl a website

The six steps to crawling a website include:

1. Understanding the domain structure.
2. Configuring the URL sources.
3. Running a test crawl.
4. Adding crawl restrictions.
5. Testing your changes.
6. Running your crawl.