What is the difference between ETL and an ETL pipeline

ETL is an acronym for “Extract, Transform, and Load” and describes the three stages of the process. An ETL pipeline is the set of processes that carries out those stages: it extracts data from one system, transforms it, and loads it into a target repository.

What is the difference between a data pipeline and an ETL pipeline

An ETL pipeline ends with loading the data into a database or data warehouse. A data pipeline doesn't always end with the load step. In a data pipeline, loading can instead start new processes and flows, for example by triggering webhooks in other systems.

What is an ETL data pipeline

An ETL pipeline is the set of processes used to move data from a source or multiple sources into a database such as a data warehouse. ETL stands for “extract, transform, load,” the three interdependent processes of data integration used to pull data from one database and move it to another.
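
The three stages can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the CSV text, the `payments` table, and the function names are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from a file, API, or database.
SOURCE_CSV = """id,name,amount
1, alice ,10.50
2,BOB,3
3,carol,not-a-number
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize names, cast amounts, drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount is not a number
        clean.append((int(row["id"]), row["name"].strip().title(), amount))
    return clean


def load(rows, conn):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(text, conn):
    """Run the three stages in order."""
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
    run_etl(SOURCE_CSV, conn)
    print(conn.execute("SELECT * FROM payments ORDER BY id").fetchall())
```

Keeping each stage in its own function makes the interdependence explicit: `load` only ever sees rows that `transform` has already cleaned.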

What is the difference between ETL and ELT

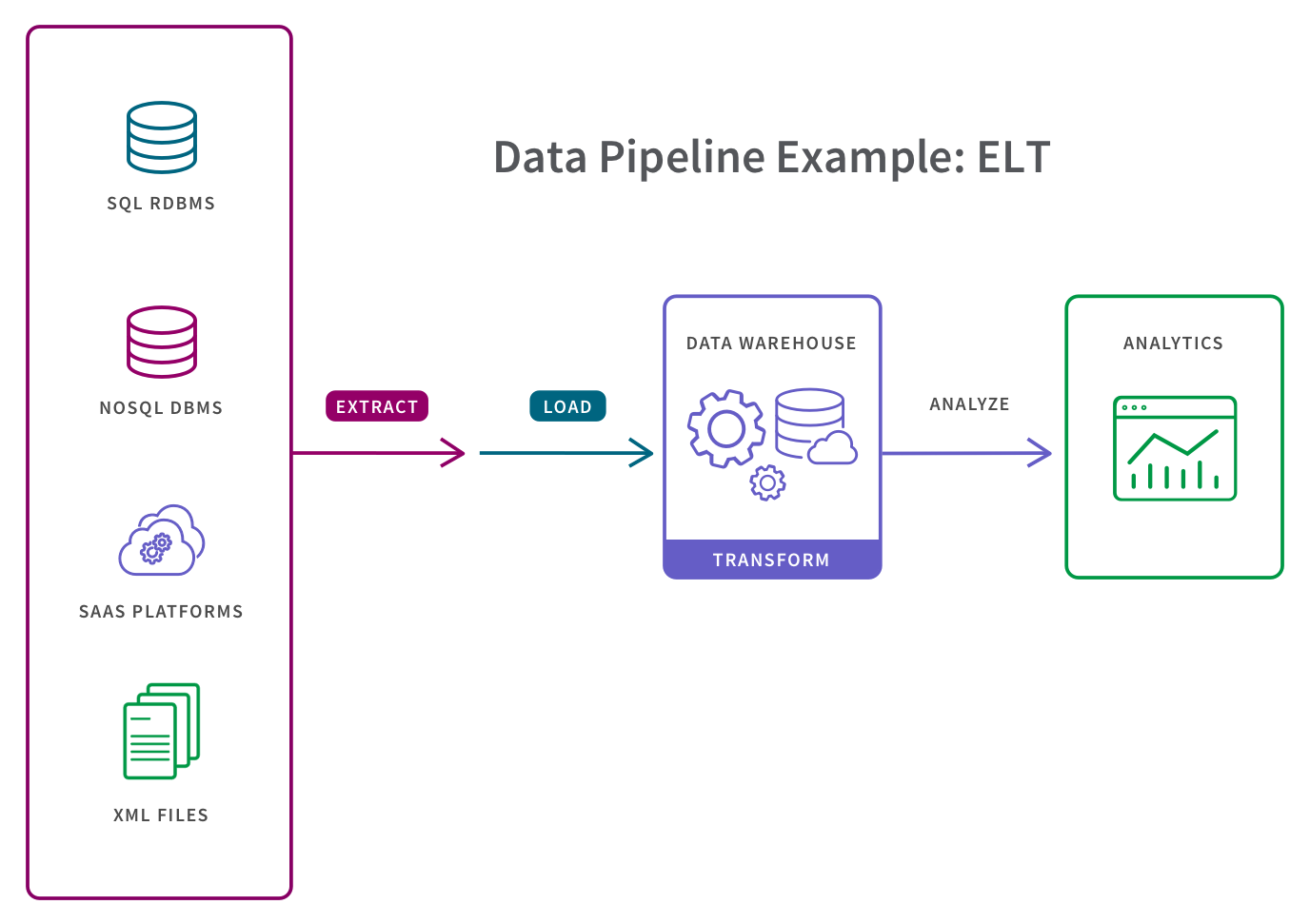

The ETL process transforms data on a secondary processing server. In contrast, the ELT process loads raw data directly into the target data warehouse. Once there, you can transform the data whenever you need it.

What are ETL and ELT data pipelines

ETL and ELT are data integration pipelines that move data from multiple sources to a single centralized destination and apply transformation and processing steps to it. The difference between the two is that ETL transforms the data before loading, and ELT transforms the data after loading.
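
The ordering difference is easier to see side by side. The sketch below is an assumption-laden illustration: the `RAW_ORDERS` rows, table names, and function names are made up, and SQLite plays the role of the target store. Both functions end with the same `orders` table; only the place where the cleanup runs differs.

```python
import sqlite3

# Hypothetical raw rows: prices arrive as untrimmed text.
RAW_ORDERS = [("a1", " 19.5 "), ("a2", "5"), ("a3", " 0.25")]


def etl(conn, rows):
    """ETL: transform in application code first, then load only the cleaned rows."""
    cleaned = [(order_id, float(price.strip())) for order_id, price in rows]
    conn.execute("CREATE TABLE orders (order_id TEXT, price REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", cleaned)


def elt(conn, rows):
    """ELT: load the raw rows as-is, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, price TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", rows)
    conn.execute(
        "CREATE TABLE orders AS "
        "SELECT order_id, CAST(TRIM(price) AS REAL) AS price FROM raw_orders"
    )


if __name__ == "__main__":
    query = "SELECT order_id, price FROM orders ORDER BY order_id"
    for pipeline in (etl, elt):
        conn = sqlite3.connect(":memory:")
        pipeline(conn, RAW_ORDERS)
        print(pipeline.__name__, conn.execute(query).fetchall())
```

Note that in the ELT variant the untouched `raw_orders` table remains in the target, so the transformation can be rerun or changed later without extracting again.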

How many types of ETL are there

ETL tools can be grouped into four categories based on their infrastructure and supporting organization or vendor: enterprise-grade, open-source, cloud-based, and custom ETL tools.

What is the difference between pipeline and data flow

Data flows through each pipe from left to right. A "pipeline" is a series of pipes that connect components together so they form a protocol. A protocol may have one or more pipelines, with each pipe numbered sequentially and executed in top-to-bottom order.

What is the difference between data flow and data pipeline

A pipeline can run without a data flow, but a data flow cannot run without a pipeline, because a Data Flow activity has to be executed inside a pipeline. The two are easy to confuse with the Copy activity, since both the Copy activity and the Data Flow activity transfer data from source to sink.

Is ELT replacing ETL

Whether ELT replaces ETL depends on the use case. ELT is commonly adopted by businesses that work with big data, while ETL is still the method of choice for businesses that move data from on-premises systems to the cloud.

Is AWS data pipeline an ETL tool

As a managed ETL (Extract-Transform-Load) service, AWS Data Pipeline allows you to define data movement and transformations across various AWS services, as well as for on-premises resources.

What is ETL vs ELT in Azure

Extract, load, and transform (ELT) differs from ETL solely in where the transformation takes place. In the ELT pipeline, the transformation occurs in the target data store. Instead of using a separate transformation engine, the processing capabilities of the target data store are used to transform data.

What are the 2 extraction types in ETL

Data extraction is divided into two categories: logical and physical. Logical extraction maintains the relationships and integrity of the data while extracting it from the source. Physical extraction, on the other hand, extracts the raw data as is from the source without considering the relationships.

What are the 5 layers of ETL

The five layers are data source, ETL (Extract-Transform-Load), data warehouse, end user, and metadata layers.

What are the main 3 stages in data pipeline

Data pipelines consist of three essential elements: a source or sources, processing steps, and a destination.
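
A minimal sketch of those three elements, assuming hypothetical record fields (`user`, `ms`) and using a plain list as the destination:

```python
def source():
    """Source: yields hypothetical event records."""
    yield {"user": "ann", "ms": 1200}
    yield {"user": "bob", "ms": -5}
    yield {"user": "cy", "ms": 300}


def drop_invalid(records):
    """Processing step: discard records with a negative duration."""
    return (r for r in records if r["ms"] >= 0)


def to_seconds(records):
    """Processing step: add a derived field in seconds."""
    return ({**r, "seconds": r["ms"] / 1000} for r in records)


def run_pipeline(src, steps, destination):
    """Feed the source through each step in order, then into the destination."""
    stream = src
    for step in steps:
        stream = step(stream)
    destination.extend(stream)
    return destination


if __name__ == "__main__":
    sink = run_pipeline(source(), [drop_invalid, to_seconds], [])
    for record in sink:
        print(record)
```

Because each step is a generator expression, records stream through one at a time; nothing is materialized until the destination consumes them.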

What is the difference between pipeline and data flow in Azure

Pipelines are for process orchestration. Data Flow is for data transformation. In Azure Data Factory (ADF), Data Flows are built on Spark and use data that is in Azure (Blob Storage, ADLS, SQL, Synapse, Cosmos DB). Connectors in pipelines are for copying data and job orchestration.

Why is ETL better than ELT

ETL can help with data privacy and compliance by cleaning sensitive and secure data before it is loaded into the data warehouse. ETL can also perform sophisticated data transformations and can be more cost-effective than ELT. ELT, by contrast, leverages the data warehouse to do basic transformations, so there is no need for data staging.
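
As an illustration of cleaning sensitive data before the load, the sketch below replaces email addresses with a salted hash during the transform step. Everything here is an assumption made for the example: the `customers` table, the column names, and the hard-coded salt. A salted hash is a simple form of pseudonymization and is not, on its own, a compliance guarantee.

```python
import hashlib
import sqlite3


def mask_email(email, salt="demo-salt"):
    """Replace an email with a salted SHA-256 digest (hypothetical masking rule)."""
    return hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()


def transform(rows):
    """Transform: mask the sensitive column before anything is loaded."""
    return [(mask_email(email), country) for email, country in rows]


def load(conn, rows):
    """Load: only masked values ever reach the target table."""
    conn.execute("CREATE TABLE customers (email_hash TEXT, country TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)


if __name__ == "__main__":
    raw = [("Ann@example.com", "DE"), ("bob@example.com", "US")]
    conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
    load(conn, transform(raw))
    print(conn.execute("SELECT * FROM customers").fetchall())
```

In an ELT design the raw emails would land in the warehouse first, which is the situation this ordering avoids.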

Is AWS Lambda an ETL tool

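AWS Lambda is not an ETL tool in itself; it is a platform on which you can write code that performs ETL. The Lambda Python runtime does not include many everyday packages and libraries (pandas, requests), and you cannot simply run pip install pandas inside Lambda. Such dependencies have to be bundled with the function, for example in the deployment package or a Lambda layer.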
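
For small jobs, the transform can be written with the standard library alone, which sidesteps the packaging problem. The handler below is a sketch: the `(event, context)` signature is the usual shape of a Python Lambda handler, but the event layout (`{"records": [...]}`) and the `sku`/`qty` fields are invented for this example, and the load step is left as a comment.

```python
import json


def lambda_handler(event, context):
    """Entry point; the event shape used here is a made-up example."""
    records = event.get("records", [])

    # Transform: normalize the SKU and keep only rows with a numeric quantity.
    transformed = [
        {"sku": r["sku"].strip().upper(), "qty": int(r["qty"])}
        for r in records
        if str(r.get("qty", "")).strip().isdigit()
    ]

    # Load: a real function would write `transformed` to a target store here.
    return {"statusCode": 200, "body": json.dumps({"loaded": len(transformed)})}


if __name__ == "__main__":
    sample = {"records": [{"sku": " ab-1 ", "qty": "3"}, {"sku": "zz", "qty": "x"}]}
    print(lambda_handler(sample, None))
```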

What are the 3 types of extraction

In chemistry, the three most common types of extraction are liquid/liquid, liquid/solid, and acid/base (also known as chemically active extraction). Brewing coffee and brewing tea are both examples of the liquid/solid type, in which a compound (caffeine) is isolated from a solid mixture by using a liquid extraction solvent (water).

What are the 4 extraction methods

In chemistry, extraction methods are classified by extraction principle into solvent extraction, distillation, pressing, and sublimation. Solvent extraction is the most widely used method.

What are the 3 layers of ETL

The first layer in ETL is the source layer, where the data lands. The second layer is the integration layer, where the data is stored after transformation. The third layer is the dimension layer, which serves as the presentation layer.

What are the 4 steps of ETL process

The ETL process has three steps (extract, transform, and load), followed by a fourth: analyze. Data is first extracted from the sources that run your business: online transaction processing (OLTP) databases, today more commonly known simply as transactional databases, and other data sources.

What are the 4 stages of pipeline

In computer architecture, a generic instruction pipeline has four stages: fetch, decode, execute, and write-back.

What are the 5 pipeline stages

In processor design, pipelining enables several operations to take place simultaneously, and the processing and memory systems to operate continuously. A five-stage (five clock cycle) ARM state pipeline consists of Fetch, Decode, Execute, Memory, and Writeback stages.

What is the difference between data flow and pipeline

Data Pipelines are mechanisms where you can define a control flow of execution, whereas Dataflows are for data transformations. You can run one or more Dataflows inside a Pipeline.
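
The relationship can be modeled in plain Python. This is a conceptual sketch only, not the Azure Data Factory API: the `Pipeline` class, its methods, and the two dataflow functions are hypothetical names chosen for the illustration.

```python
def only_active(rows):
    """Dataflow: a transformation that filters rows."""
    return [r for r in rows if r["active"]]


def uppercase_names(rows):
    """Dataflow: a transformation that rewrites a column."""
    return [{**r, "name": r["name"].upper()} for r in rows]


class Pipeline:
    """Control flow: decides which dataflows run and in what order."""

    def __init__(self, name):
        self.name = name
        self.activities = []
        self.log = []

    def add_dataflow(self, dataflow):
        self.activities.append(dataflow)
        return self  # allow chaining

    def run(self, rows):
        for dataflow in self.activities:
            rows = dataflow(rows)
            self.log.append((dataflow.__name__, len(rows)))
        return rows


if __name__ == "__main__":
    data = [{"name": "ann", "active": True}, {"name": "bob", "active": False}]
    pipeline = Pipeline("daily").add_dataflow(only_active).add_dataflow(uppercase_names)
    print(pipeline.run(data))
    print(pipeline.log)
```

A `Pipeline` with no dataflows still runs (it returns its input unchanged), while a dataflow function does nothing until some pipeline calls it, which mirrors the dependency described above.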