What is the difference between ETL process and ETL pipeline

ETL refers to a set of processes that extract data from one system, transform it, and load it into a target system. A data pipeline is a more generic term: it refers to any set of processing steps that moves data from one system to another, and it may or may not transform the data along the way.
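
The distinction can be sketched in a few lines of Python. This is a minimal illustration, assuming plain in-memory lists stand in for the source and target systems; `extract`, `transform`, `load`, `etl_pipeline`, and `copy_pipeline` are hypothetical names. Both functions move data from one system to another, so both are data pipelines, but only the first transforms the data on the way, which is what makes it an ETL pipeline.

```python
from typing import Iterable

Record = dict[str, str]

def extract(source: Iterable[Record]) -> list[Record]:
    """Read every record from the (hypothetical) source system."""
    return list(source)

def transform(records: list[Record]) -> list[Record]:
    """Example transformation: trim and lowercase email addresses."""
    return [{**r, "email": r["email"].strip().lower()} for r in records]

def load(records: list[Record], target: list[Record]) -> None:
    """Append records to the (hypothetical) target system."""
    target.extend(records)

def etl_pipeline(source: Iterable[Record], target: list[Record]) -> None:
    # ETL: extract -> transform -> load
    load(transform(extract(source)), target)

def copy_pipeline(source: Iterable[Record], target: list[Record]) -> None:
    # A data pipeline that moves data without transforming it
    load(extract(source), target)

source = [{"email": " Ada@Example.com "}]
etl_target: list[Record] = []
copy_target: list[Record] = []
etl_pipeline(source, etl_target)    # etl_target == [{"email": "ada@example.com"}]
copy_pipeline(source, copy_target)  # copy_target == [{"email": " Ada@Example.com "}]
```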

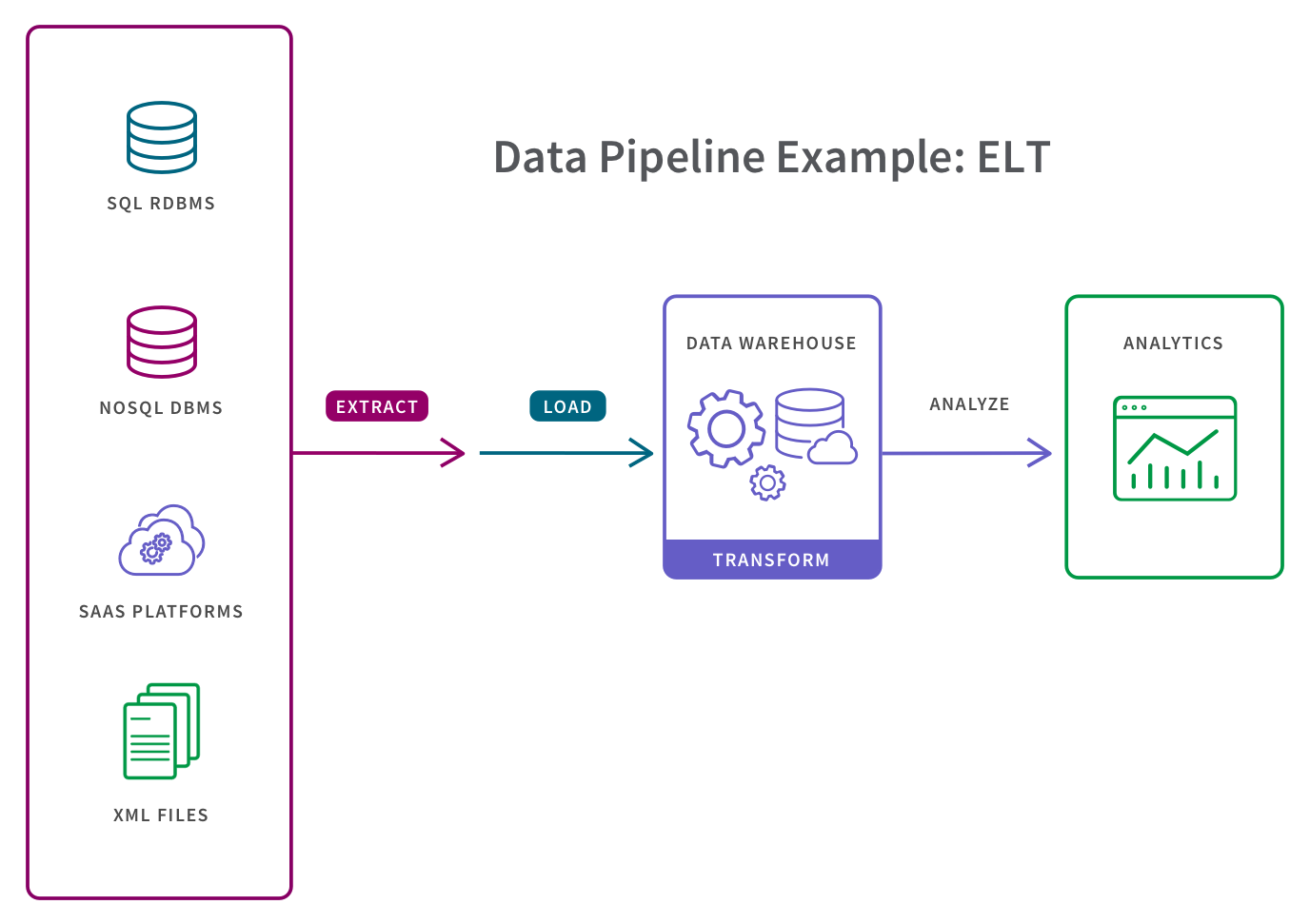

What is the difference between ETL and ELT data pipelines

ETL is a time-intensive process: data is transformed before it is loaded into a destination system, and the transformations are performed on a secondary processing server, which makes ETL best suited to compute-intensive transformations and pre-cleansing of data. ELT is faster by comparison: data is loaded directly into the destination system and transformed there, in parallel.
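
The difference in ordering can be sketched with Python's built-in `sqlite3` module standing in for the destination system. This is an assumption for illustration only; a real ELT target would typically be a cloud data warehouse. The table names (`sales_etl`, `raw_sales`, `sales_elt`) and sample rows are hypothetical. In the ETL path, Python transforms the rows before loading them; in the ELT path, the raw rows are loaded first and then transformed with SQL inside the destination.

```python
import sqlite3

# Hypothetical raw rows: (region, amount in cents stored as text)
RAW_ROWS = [("north", "1250"), ("south", "980"), ("north", "300")]

def run_etl(conn: sqlite3.Connection) -> None:
    # Transform first, outside the destination: uppercase region, cents -> dollars
    transformed = [(region.upper(), int(cents) / 100) for region, cents in RAW_ROWS]
    conn.execute("CREATE TABLE sales_etl (region TEXT, amount REAL)")
    # Then load the already-transformed rows
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", transformed)

def run_elt(conn: sqlite3.Connection) -> None:
    # Load the raw rows untouched
    conn.execute("CREATE TABLE raw_sales (region TEXT, cents TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", RAW_ROWS)
    # Then transform inside the destination with SQL
    conn.execute(
        """
        CREATE TABLE sales_elt AS
        SELECT UPPER(region) AS region, CAST(cents AS INTEGER) / 100.0 AS amount
        FROM raw_sales
        """
    )

conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
query = "SELECT region, SUM(amount) FROM {} GROUP BY region ORDER BY region"
etl_totals = conn.execute(query.format("sales_etl")).fetchall()
elt_totals = conn.execute(query.format("sales_elt")).fetchall()
```

Both paths end with the same totals; what differs is where the transformation runs and whether the raw data is kept in the destination.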

What is the main difference between ETL and ELT

Difference between ELT and ETL:

| ELT | ETL |
|---|---|
| Mostly uses Hadoop or a NoSQL database to store data; an RDBMS is rarely used | Uses an RDBMS exclusively to store data |
| Because all components are in one system, data is loaded only once | Because ETL uses a staging area, loading the data takes extra time |

What are ETL processes or data pipelines

The term “ETL” describes a group of processes used to extract data from one system, transform it, and load it into a target system. A more general phrase is “data pipeline,” which refers to any operation that transfers data from one system to another while potentially transforming it as well.

What is the difference between data flow and pipeline

In visual pipeline-authoring tools that use this model, data flows through each pipe from left to right. A "pipeline" is a series of pipes that connect components together to form a protocol. A protocol may contain one or more pipelines; each pipe is numbered sequentially, and execution proceeds in top-to-bottom order.
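
A minimal Python sketch of this model, assuming each component is a function that consumes a stream of records and returns a new stream; the component names and the `run_pipeline`/`run_protocol` helpers are hypothetical. Chaining the generators plays the role of the pipes, with data flowing left to right through each component, and a protocol is represented as a list of pipelines run in order from top to bottom.

```python
from typing import Callable, Iterable, Iterator

Component = Callable[[Iterable[int]], Iterator[int]]

def drop_negatives(items: Iterable[int]) -> Iterator[int]:
    # Hypothetical component: filter out negative values
    return (x for x in items if x >= 0)

def double(items: Iterable[int]) -> Iterator[int]:
    # Hypothetical component: multiply every value by two
    return (2 * x for x in items)

def run_pipeline(source: Iterable[int], components: list[Component]) -> list[int]:
    """Connect components with 'pipes': each one's output feeds the next, left to right."""
    stream: Iterable[int] = source
    for component in components:
        stream = component(stream)
    return list(stream)

def run_protocol(pipelines: list[tuple[Iterable[int], list[Component]]]) -> list[list[int]]:
    """A protocol runs its pipelines one after another, top to bottom."""
    return [run_pipeline(source, components) for source, components in pipelines]

results = run_protocol([
    ([3, -1, 4], [drop_negatives, double]),  # pipeline 1
    ([5, -9], [double]),                     # pipeline 2
])
```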

Is AWS Data Pipeline an ETL tool

As a managed ETL (Extract-Transform-Load) service, AWS Data Pipeline allows you to define data movement and transformations across various AWS services as well as on-premises resources.

When to choose ETL vs ELT

ETL is most appropriate for processing smaller, relational data sets which require complex transformations and have been predetermined as being relevant to the analysis goals. ELT can handle any size or type of data and is well suited for processing both structured and unstructured big data.

Is ELT replacing ETL

Whether ELT replaces ETL depends on the use case. ELT has been adopted by businesses that work with big data, but ETL is still the method of choice for businesses that move data from on-premises systems to the cloud. What is clear is that data keeps growing in volume and is becoming more pervasive.

What is an ETL pipeline vs a data pipeline

An ETL pipeline is a series of processes that extract data from a source, transform it, and load it into the destination system. A data pipeline, on the other hand, is a broader term that includes ETL pipelines as a subset.

What is the difference between data flow and pipeline in Azure

Pipelines are for process orchestration, while data flows are for data transformation. In Azure Data Factory (ADF), data flows run on Spark and work with data stored in Azure (Blob Storage, ADLS, SQL, Synapse, Cosmos DB). Connectors in pipelines are used for copying data and orchestrating jobs.
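
The division of labor can be illustrated with a plain-Python analogy. This is not the Azure Data Factory API; every name here (`copy_activity`, `data_flow_activity`, `run_pipeline`) is hypothetical. The "pipeline" only orchestrates activities and their dependencies, while the "data flow" is one activity that actually transforms rows.

```python
from typing import Callable

Activity = Callable[[dict], None]

def copy_activity(ctx: dict) -> None:
    # Connector-style step: copy rows from the source into staging, unchanged
    ctx["staging"] = list(ctx["source"])

def data_flow_activity(ctx: dict) -> None:
    # Transformation step: filter rows and derive a new column
    ctx["sink"] = [
        {"id": r["id"], "total": r["qty"] * r["price"]}
        for r in ctx["staging"]
        if r["qty"] > 0
    ]

def run_pipeline(activities: list[tuple[str, Activity, list[str]]], ctx: dict) -> list[str]:
    """Orchestrate: run each activity only after its dependencies have completed.

    Assumes activities are listed in dependency order (a simplification).
    """
    done: list[str] = []
    for name, activity, depends_on in activities:
        missing = [d for d in depends_on if d not in done]
        if missing:
            raise RuntimeError(f"{name} is waiting on {missing}")
        activity(ctx)
        done.append(name)
    return done

ctx = {"source": [{"id": 1, "qty": 2, "price": 5.0}, {"id": 2, "qty": 0, "price": 9.0}]}
order = run_pipeline(
    [("Copy", copy_activity, []), ("Transform", data_flow_activity, ["Copy"])],
    ctx,
)
```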

What are the 3 main stages of a data pipeline

Data pipelines consist of three essential elements: a source or sources, processing steps, and a destination.
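
The three elements map naturally onto a small data structure. The sketch below is a minimal illustration, not a standard API; the `DataPipeline` class, the step functions, and the sample records are hypothetical. Each step receives a record and returns it (possibly modified), or returns `None` to drop it.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional

Record = dict
Step = Callable[[Record], Optional[Record]]  # a step returns None to drop a record

@dataclass
class DataPipeline:
    sources: list[Iterable[Record]]  # element 1: one or more sources
    steps: list[Step]                # element 2: processing steps, applied in order
    destination: list[Record]        # element 3: where the results land

    def run(self) -> int:
        """Push every source record through the steps; return how many were loaded."""
        loaded = 0
        for source in self.sources:
            for record in source:
                for step in self.steps:
                    record = step(record)
                    if record is None:
                        break  # a step dropped this record
                else:
                    self.destination.append(record)
                    loaded += 1
        return loaded

def require_name(r: Record) -> Optional[Record]:
    return r if r.get("name") else None

def add_greeting(r: Record) -> Record:
    return {**r, "greeting": f"Hello, {r['name']}"}

warehouse: list[Record] = []
pipeline = DataPipeline(
    sources=[[{"name": "Ada"}], [{"name": ""}, {"name": "Lin"}]],
    steps=[require_name, add_greeting],
    destination=warehouse,
)
count = pipeline.run()
```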

What is the best ETL tool for AWS

Tools often named as the best for ETL on AWS include:

- Fivetran: a cloud-based data integration platform that specializes in automating data pipelines.
- AWS Glue: a widely used ETL tool that is fully managed by AWS.
- AWS Data Pipeline
- Stitch Data
- Talend
- Informatica
- Integrate

Is AWS Lambda an ETL tool

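AWS Lambda is a platform where you can write code that performs ETL, but the Lambda runtime doesn't include many libraries used on a daily basis (such as pandas and Requests), and a standard `pip install pandas` won't work inside a running Lambda function. Such libraries have to be packaged with the function, for example in a deployment package or a Lambda layer.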
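
When the transformation is simple enough, another option is to rely only on the Python standard library. Below is a minimal sketch of a Lambda-style handler. The `lambda_handler(event, context)` signature follows Lambda's Python handler convention, but the event shape (a `csv_body` key holding CSV text), the column names, and the returned dictionary are hypothetical; in a real function the extract and load steps would typically read from and write to a service such as Amazon S3.

```python
import csv
import io
import json

def lambda_handler(event, context):
    """ETL using only the standard library (no pandas needed).

    Assumes a hypothetical event of the form {"csv_body": "<CSV text>"}.
    """
    # Extract: parse the CSV text carried in the event
    reader = csv.DictReader(io.StringIO(event["csv_body"]))
    # Transform: keep paid orders and convert amounts to numbers
    orders = [
        {"order_id": row["order_id"], "amount": float(row["amount"])}
        for row in reader
        if row["status"] == "paid"
    ]
    # Load: this sketch just returns JSON (illustrative shape);
    # a real function might write to S3 or a database instead
    return {"statusCode": 200, "body": json.dumps(orders)}

# Local usage: Lambda supplies a context object; None is enough for this sketch
sample = {"csv_body": "order_id,status,amount\nA1,paid,19.99\nA2,refunded,5.00\nA3,paid,7.50\n"}
response = lambda_handler(sample, None)
```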

Which language is best for an ETL pipeline

Python

There are many reasons why organizations choose to build ETL pipelines with Python. One of the main ones is that Python is well suited to handling complex schemas and large volumes of data, which makes it a strong choice for data-driven organizations.

Why ELT is faster than ETL

Because loading in ELT is a single step that happens only once, it is faster than in ETL. ETL processes that involve an on-premises server also require frequent maintenance by IT, given their fixed tables, fixed timelines, and the need to repeatedly select data to load and transform.

What will replace ETL

The top alternatives to ETL frameworks recently reviewed by the G2 community include:

- MuleSoft Anypoint Platform, 4.4 out of 5 (572 reviews)
- Cleo Integration Cloud, 4.3 out of 5 (462 reviews)
- UiPath: Robotics Process Automation (RPA), 4.6 out of 5 (6,242 reviews)
- Microsoft SQL Server, 4.4 out of 5 (2,146 reviews)
- Appy Pie, 4.7 out of 5 (1,185 reviews)
- Zapier
- Supermetrics
- Integrately

What is the difference between data integration and data pipeline

Data pipelines are used to perform data integration. Data integration is the process of bringing together data from multiple sources to provide a complete and accurate dataset for business intelligence (BI), data analysis and other applications and business processes.
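
As an illustration of the integration step a pipeline might perform, here is a minimal sketch assuming two hypothetical in-memory sources (a CRM export and a billing export) that share a `customer_id` key. The `integrate` function is a hypothetical helper that behaves like a full outer join; real integrations also have to resolve conflicting values, which this sketch handles crudely by letting the later source win.

```python
from typing import Any

def integrate(*sources: list[dict[str, Any]], key: str = "customer_id") -> list[dict[str, Any]]:
    """Combine records from several sources into one record per key.

    Behaves like a full outer join: every key seen in any source appears once.
    If two sources supply the same field, the later source wins.
    """
    combined: dict[Any, dict[str, Any]] = {}
    for source in sources:
        for record in source:
            combined.setdefault(record[key], {}).update(record)
    # Sort by key so the output order is deterministic
    return [combined[k] for k in sorted(combined)]

# Hypothetical sources describing overlapping sets of customers
crm = [{"customer_id": 1, "name": "Ada"}, {"customer_id": 2, "name": "Lin"}]
billing = [{"customer_id": 1, "balance": 120.0}, {"customer_id": 3, "balance": 40.0}]
unified = integrate(crm, billing)
```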

What is the ETL end to end pipeline

An ETL pipeline is the set of processes used to move data from a source or multiple sources into a database such as a data warehouse. ETL stands for “extract, transform, load,” the three interdependent processes of data integration used to pull data from one database and move it to another.

Is SQL a data pipeline

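SQL itself is a query language rather than a pipeline, but it can be used to define one. For example, in Google Cloud Dataflow SQL, a SQL query runs as a Dataflow pipeline, and the results of the pipeline are written to a BigQuery table. To run a Dataflow SQL job, you can use the Google Cloud console, the Google Cloud CLI installed on a local machine, or Cloud Shell.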

What is the difference between a data flow and a pipeline in Azure Data Factory

An ADF Pipeline allows you to orchestrate and manage dependencies for all the components involved in the data loading process. ADF Mapping Data Flows allow you to perform data row transformations as your data is being processed, from source to target.

What are the 4 stages of a pipeline

A generic CPU instruction pipeline has four stages: fetch, decode, execute, and write-back.
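
The overlap between instructions is easiest to see as a cycle-by-cycle table. This is a minimal sketch assuming an ideal pipeline, where one new instruction enters per clock cycle and there are no stalls or hazards; `pipeline_schedule` is a hypothetical helper, and real processors add forwarding, stalls, and branch handling on top of this.

```python
def pipeline_schedule(stages: list[str], n_instructions: int) -> list[list[str]]:
    """Return, for each clock cycle, which instruction occupies each stage.

    Assumes an ideal pipeline: one instruction enters per cycle, no stalls.
    """
    total_cycles = len(stages) + n_instructions - 1
    schedule = []
    for cycle in range(total_cycles):
        row = []
        for s in range(len(stages)):
            i = cycle - s  # index of the instruction sitting in stage s this cycle
            row.append(f"I{i + 1}" if 0 <= i < n_instructions else "-")
        schedule.append(row)
    return schedule

STAGES = ["fetch", "decode", "execute", "write-back"]
for cycle, row in enumerate(pipeline_schedule(STAGES, 3), start=1):
    print(f"cycle {cycle}: " + "  ".join(f"{stage}={who}" for stage, who in zip(STAGES, row)))
```

With four stages, three instructions finish in six cycles instead of twelve, because from the third cycle onward several stages are busy at once.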

What are the 5 pipeline stages

Pipelining enables several operations to take place simultaneously and lets the processing and memory systems operate continuously. For example, some ARM processors use a five-stage (five clock cycle) pipeline in ARM state, consisting of Fetch, Decode, Execute, Memory, and Writeback stages.
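
The benefit can be quantified under the usual idealization, which is an assumption here: every stage takes one clock cycle, one instruction enters per cycle, and there are no stalls or hazards. With k stages and n instructions, a pipelined processor needs k cycles to complete the first instruction and one more cycle for each of the remaining n - 1, while an unpipelined one needs k cycles per instruction:

```latex
\begin{aligned}
T_{\text{pipelined}} &= k + (n - 1), \qquad T_{\text{unpipelined}} = n k,\\[4pt]
\text{speedup} &= \frac{n k}{k + n - 1} \;\xrightarrow{\;n \to \infty\;}\; k,\\[4pt]
k = 5,\; n = 100:\quad T_{\text{pipelined}} &= 104, \quad T_{\text{unpipelined}} = 500, \quad \text{speedup} = \tfrac{500}{104} \approx 4.81.
\end{aligned}
```

So a five-stage pipeline approaches, but never quite reaches, a fivefold speedup; stalls in real hardware reduce it further.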

Does AWS have an ETL tool

Yes. AWS Glue is AWS's fully managed ETL service. AWS Glue Studio offers Visual ETL, Notebook, and code editor interfaces, so users have tools appropriate to their skill sets. With Interactive Sessions, data engineers can explore data as well as author and test jobs using their preferred IDE or notebook.

What is an ETL pipeline in Python

An ETL pipeline is the sequence of processes that moves data from a source (or several sources) into a database, such as a data warehouse. There are multiple ways to perform ETL, but Python is one of the most widely used languages for it.
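
A minimal end-to-end sketch using only the standard library, assuming a CSV string as the source and an in-memory SQLite database standing in for the data warehouse. The column names, the `sales` table, and the cleaning rules are hypothetical. In practice, libraries such as pandas or a warehouse's own client library are common, but they are left out here so the example runs without extra dependencies.

```python
import csv
import io
import sqlite3

# Hypothetical source: a CSV export from an operational system
SOURCE_CSV = """date,product,units,unit_price
2024-01-03,widget,4,2.50
2024-01-03,gadget,,9.99
2024-01-04,widget,1,2.50
"""

def extract(csv_text: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(csv_text)))

def transform(rows: list[dict[str, str]]) -> list[tuple[str, str, int, float]]:
    """Transform: drop incomplete rows, cast types, and compute revenue."""
    cleaned = []
    for row in rows:
        if not row["units"]:
            continue  # skip rows with a missing quantity
        units = int(row["units"])
        cleaned.append((row["date"], row["product"], units, units * float(row["unit_price"])))
    return cleaned

def load(rows: list[tuple[str, str, int, float]], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (date TEXT, product TEXT, units INTEGER, revenue REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
daily = warehouse.execute(
    "SELECT date, SUM(revenue) FROM sales GROUP BY date ORDER BY date"
).fetchall()
```

Keeping extract, transform, and load as separate functions makes each stage testable on its own and lets you swap the source or destination without touching the transformation logic.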