What is the difference between IDF and TF-IDF

Term Frequency: TF of a term or word is the number of times the term appears in a document compared to the total number of words in the document. Inverse Document Frequency: IDF of a term reflects the proportion of documents in the corpus that contain the term.

What is the relation between TF and IDF

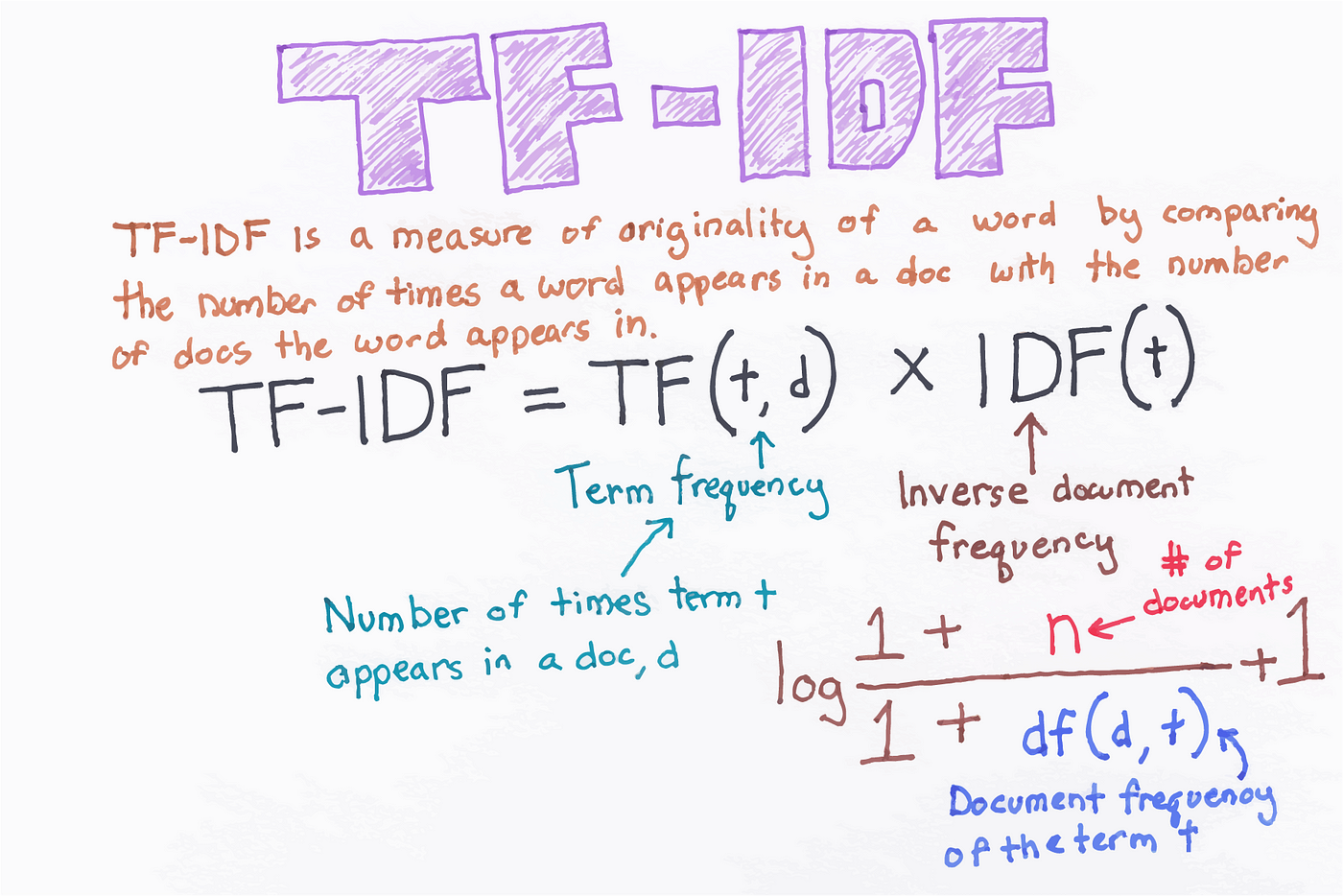

TF-IDF stands for term frequency-inverse document frequency and it is a measure, used in the fields of information retrieval (IR) and machine learning, that can quantify the importance or relevance of string representations (words, phrases, lemmas, etc) in a document amongst a collection of documents (also known as a …

What is the difference between Sklearn TF-IDF and standard TF-IDF

Here are the differences: TF remains the same, while IDF is different:“ Some constant 1” is added to the numerator and denominator of the IDF as if an extra document was seen containing every term in the collection exactly once, which prevents zero divisions” a more empirical approach, while the standard notation …

What is the difference between TF-IDF and Word2vec

TF-IDF model's performance is better than the Word2vec model because the number of data in each emotion class is not balanced and there are several classes that have a small number of data. The number of surprised emotions is a minority of data which has a large difference in the number of other emotions.

What is an example of TF and IDF

okay, for now let's just say that TF answers questions like — how many times is beauty used in that entire document, give me a probability and IDF answers questions like how important is the word beauty in the entire list of documents, is it a common theme in all the documents.

What is better than TF-IDF

You can try using "gensim". I did a similar project with unstructured data. Gensim gave better scores than standard TFIDF. It also ran faster.

What is the difference between TF-IDF and embedding

By having a larger vocabulary the embedding method is likely to assign rules to words that are only rarely seen in training. Conversely, the TF-IDF method had a smaller vocabulary and so rules could only be formed on words which had been seen in many training examples.

Why TF-IDF is used in sentiment analysis

TF-IDF is a product of 'term-frequency' and 'inverse document frequency' statistics. Thus it solves both above-described issues with TF and IDF alone and gives a score value to rank documents based on both. TFIDF score tells the importance of a given word in a given document (when a lot of documents are present).

What is the disadvantage of TF-IDF

It should be noted that tf-idf cannot assist in carrying semantic meaning. It weighs the words and considers them when determining their importance, but it cannot always infer the context of the phrase or determine their significance in that way.

What is the difference between Tfidftransformer and Tfidfvectorizer

With Tfidftransformer you will systematically compute word counts using CountVectorizer and then compute the Inverse Document Frequency (IDF) values and only then compute the Tf-idf scores. With Tfidfvectorizer on the contrary, you will do all three steps at once.

What are two limitations of the TF-IDF representation

However, TF-IDF has several limitations: – It computes document similarity directly in the word-count space, which may be slow for large vocabularies. – It assumes that the counts of different words provide independent evidence of similarity. – It makes no use of semantic similarities between words.

What is the difference between TF-IDF vectorizer and TF-IDF transformer

Both tfidf vectorizer and transformer are same but differ only in Normalization step. tfidf transformer perform that extra step called "Normalization" to make all the values within the 0 to 1 range,where as tfidf vectorizer doesnot perform the Normalization step.