What is the best way to test reliability

4 ways to assess reliability in research:

1. Pick a consistent research method.
2. Create a sample group and ensure the members are also consistent.
3. Administer your test using the chosen method.
4. Repeat the exact same testing process one or multiple times with the same sample group.

What are the methods in testing the reliability of a good research instrument

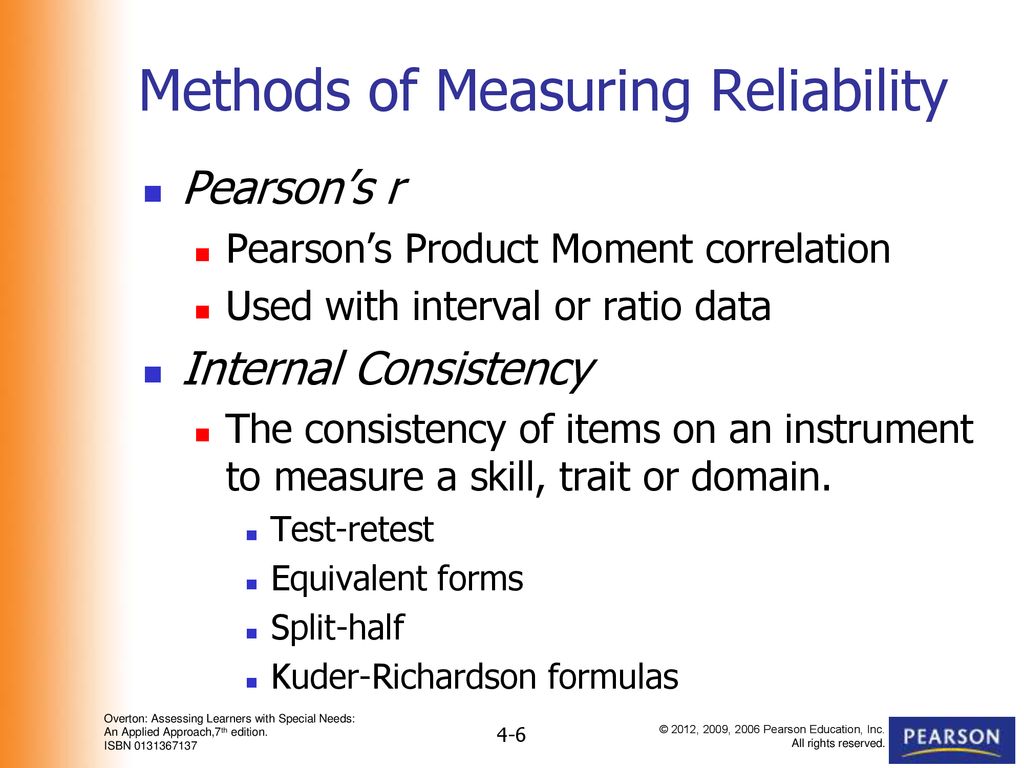

Reliability can be assessed with the test-retest method, the alternative form method, the internal consistency method, the split-halves method, and inter-rater reliability. Test-retest is a method that administers the same instrument to the same sample at two different points in time, for example one year apart.

Which test is most reliable and valid

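Objective-type tests tend to be highly reliable because they require a one-word answer, which minimizes subjective inference and judgment in scoring. Objective scoring supports validity as well, but it does not guarantee it: validity still depends on whether the items measure the intended characteristic.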

What is method of estimating reliability

Test-Retest Method:

To estimate reliability by means of the test-retest method, the same test is administered twice to the same group of pupils with a given time interval between the two administrations of the test.
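As a rough illustration of the test-retest method, the sketch below correlates two administrations of the same test. The scores are invented for the example, and using the Pearson correlation as the reliability coefficient is a common convention assumed here rather than something the text above prescribes.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same six pupils on two administrations.
first_administration = [12, 15, 9, 20, 17, 11]
second_administration = [13, 14, 10, 19, 18, 10]

test_retest_r = pearson_r(first_administration, second_administration)
print(f"test-retest reliability: {test_retest_r:.3f}")  # prints 0.964
```

A coefficient close to 1 means pupils kept roughly the same rank order and spacing across the two administrations.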

What are four methods to measure a test reliability

There are several methods for computing test reliability including test-retest reliability, parallel forms reliability, decision consistency, internal consistency, and interrater reliability. For many criterion-referenced tests decision consistency is often an appropriate choice.
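Decision consistency can be sketched as the share of examinees who receive the same pass/fail decision on two administrations. The cut score, the data, and the function name `decision_consistency` are all assumptions made for this example, and the raw agreement proportion shown is only one of several indices in use.

```python
def decision_consistency(scores_a, scores_b, cut_score):
    """Share of examinees with the same pass/fail decision on both occasions."""
    same = sum(
        (a >= cut_score) == (b >= cut_score)
        for a, b in zip(scores_a, scores_b)
    )
    return same / len(scores_a)


# Hypothetical scores for eight examinees on two administrations.
form_a = [55, 72, 68, 40, 90, 61, 59, 77]
form_b = [58, 70, 59, 45, 88, 63, 62, 75]

agreement = decision_consistency(form_a, form_b, cut_score=60)
print(agreement)  # prints 0.75
```

Here six of the eight examinees land on the same side of the cut score both times.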

What is the Cronbach alpha test for reliability

Cronbach's alpha is a way of assessing reliability by comparing the amount of shared variance, or covariance, among the items making up an instrument to the amount of overall variance. The idea is that if the instrument is reliable, there should be a great deal of covariance among the items relative to the overall variance.
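A minimal sketch of the calculation, assuming the usual formula alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The response matrix is made up for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one row per respondent, one column per item."""
    k = len(responses[0])
    item_columns = list(zip(*responses))
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(col) for col in item_columns)
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical answers from five respondents to three Likert items.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
    [1, 2, 2],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.3f}")  # prints 0.963
```

When the items move together, the variance of the total score is much larger than the sum of the item variances, which pushes alpha toward 1.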

What are the most common methods for reliability analysis

The most common method for assessing the reliability of survey responses has been to conduct reinterviews with respondents a short interval (one to two weeks) after an initial interview and to estimate relatively simple statistics from these data, such as the gross difference rate (GDR).
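The gross difference rate can be sketched as the proportion of respondents whose reinterview answer differs from their original answer. The answers below are invented, and the function name `gross_difference_rate` is just a label for this example.

```python
def gross_difference_rate(interview, reinterview):
    """Proportion of respondents whose two answers to the same item differ."""
    differing = sum(a != b for a, b in zip(interview, reinterview))
    return differing / len(interview)


# Hypothetical answers from ten respondents to one yes/no question.
interview = ["yes", "no", "yes", "yes", "no", "no", "yes", "no", "yes", "yes"]
reinterview = ["yes", "no", "no", "yes", "no", "yes", "yes", "no", "yes", "yes"]

gdr = gross_difference_rate(interview, reinterview)
print(gdr)  # prints 0.2
```

A lower rate indicates more consistent responses between the two interviews.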

What are the methods of measuring reliability and validity in research

Reliable measures are those with low random (chance) errors. Reliability is assessed by one of four methods: retest, alternative-form test, split-halves test, or internal consistency test. Validity is measuring what is intended to be measured. Valid measures are those with low nonrandom (systematic) errors.

What is the most common form of reliability testing

The most common way to measure parallel forms reliability is to produce a large set of questions to evaluate the same thing, then divide these randomly into two question sets. The same group of respondents answers both sets, and you calculate the correlation between the results.
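The procedure described above can be sketched as follows: split an item pool at random into two forms, score each respondent on both, and correlate the two sets of scores. The answer matrix, the seed, and the helper names are assumptions for illustration only.

```python
import random
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def split_into_forms(n_items, seed):
    """Randomly divide item indices 0..n_items-1 into two equal-sized forms."""
    rng = random.Random(seed)
    items = list(range(n_items))
    rng.shuffle(items)
    half = n_items // 2
    return sorted(items[:half]), sorted(items[half:])


# Hypothetical data: one row per respondent, 1 = correct, 0 = incorrect.
answers = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1, 0, 1],
    [1, 0, 1, 0, 1, 0, 1, 0],
    [0, 1, 0, 0, 1, 0, 0, 1],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 0, 1, 1, 1, 1, 0],
]

form_a_items, form_b_items = split_into_forms(len(answers[0]), seed=0)
form_a_scores = [sum(row[i] for i in form_a_items) for row in answers]
form_b_scores = [sum(row[i] for i in form_b_items) for row in answers]

parallel_forms_r = pearson_r(form_a_scores, form_b_scores)
print(f"parallel forms reliability: {parallel_forms_r:.3f}")
```

The fixed seed only makes the split reproducible; in practice the split would be drawn once and both forms administered to the same group.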

Which test is considered a reliable test

Reliability refers to how dependably or consistently a test measures a characteristic. If a person takes the test again, will he or she get a similar test score, or a much different score? A test that yields similar scores for a person who repeats the test is said to measure a characteristic reliably.

What are the two methods of reliability

There are two types of reliability – internal and external reliability. Internal reliability assesses the consistency of results across items within a test. External reliability refers to the extent to which a measure varies from one use to another.

What are the 3 ways of measuring reliability

Reliability refers to the consistency of a measure. Psychologists consider three types of consistency: over time (test-retest reliability), across items (internal consistency), and across different researchers (inter-rater reliability).

Is Cronbach alpha the same as reliability

Cronbach's alpha is a measure of reliability but not validity. It can indicate whether responses are consistent between items (reliability), but it cannot determine whether the items measure the correct concept (validity).

What is the difference between Cronbach alpha and Pearson correlation

Pearson's correlation looks at the linear relationship between two sets of interval data, regardless of what they represent. Cronbach's alpha (commonly used in psychology and education) is geared to whether a set of items, or a set of raters coding the same data, are consistent among themselves, so it summarizes the reliability of a whole set of measurements rather than the relationship between a single pair.

What are the methods of measuring validity of a test

Five methods for estimating the validity of a test:

- Correlation coefficient method: the scores of the newly constructed test are correlated with criterion scores.
- Cross-validation method
- Expectancy table method
- Item analysis method
- Method of inter-correlation of items and factor analysis

Is Cronbach alpha test the most common test for reliability

Cronbach's alpha is the most common measure of internal consistency ("reliability"). It is most commonly used when you have multiple Likert questions in a survey/questionnaire that form a scale and you wish to determine if the scale is reliable.

What are the two types of test reliability

Types of reliability:

- Inter-rater or inter-observer reliability: used to assess the degree to which different raters/observers give consistent estimates of the same phenomenon.
- Test-retest reliability: used to assess the consistency of a measure from one time to another.
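For inter-rater reliability, one widely used statistic is Cohen's kappa, which corrects the raw agreement between two raters for the agreement expected by chance. The text above does not name a specific statistic, so kappa is an assumption of this sketch, and the codings are invented.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters on categorical codes."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if both raters coded independently at their own base rates.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned by two observers to the same ten observations.
rater_a = ["on", "on", "off", "on", "off", "off", "on", "off", "on", "on"]
rater_b = ["on", "off", "off", "on", "off", "on", "on", "off", "on", "on"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.3f}")  # prints 0.583
```

The raters agree on 8 of 10 observations, but because 52% agreement would be expected by chance here, kappa is noticeably lower than 0.8.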

Which is better to use Pearson or Spearman

The difference between the Pearson correlation and the Spearman correlation is that the Pearson is most appropriate for measurements taken from an interval scale, while the Spearman is more appropriate for measurements taken from ordinal scales.

Which is better Pearson or Spearman

One more difference is that Pearson works with raw data values of the variables whereas Spearman works with rank-ordered variables. Now, if we feel that a scatterplot is visually indicating a “might be monotonic, might be linear” relationship, our best bet would be to apply Spearman and not Pearson.
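The difference shows up clearly on data that are monotonic but not linear. The sketch below computes Spearman's coefficient as the Pearson correlation of the ranks; the data are invented, and the simple `ranks` helper assumes there are no tied values.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """Rank from 1 (smallest) upward; assumes all values are distinct."""
    order = sorted(values)
    return [order.index(v) + 1 for v in values]


# Hypothetical data: y rises with x, but along a curve rather than a line.
x = [1, 2, 3, 4, 5, 6]
y = [v ** 3 for v in x]

pearson = pearson_r(x, y)
spearman = pearson_r(ranks(x), ranks(y))
print(f"Pearson:  {pearson:.3f}")   # prints 0.938
print(f"Spearman: {spearman:.3f}")  # prints 1.000
```

Spearman reaches 1 because the rank order is preserved perfectly, while Pearson falls short of 1 because the relationship is not a straight line.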

How do you measure test validity and reliability

Reliability can be estimated by comparing different versions of the same measurement. Validity is harder to assess, but it can be estimated by comparing the results to other relevant data or theory.

Why use Cronbach alpha in reliability

Cronbach's alpha coefficient measures the internal consistency, or reliability, of a set of survey items. Use this statistic to help determine whether a collection of items consistently measures the same characteristic. Cronbach's alpha quantifies the level of agreement on a standardized scale that normally runs from 0 to 1, with higher values indicating greater agreement among the items.