Is there an iPhone app like Google Lens

Apple offers iPhone users a Google Lens-like feature that can recognise things in images. The Visual Look Up feature works only with certain Apple apps on iPhones and can identify particular objects, including pets, art, landmarks and more.

What Apple app is similar to Lens

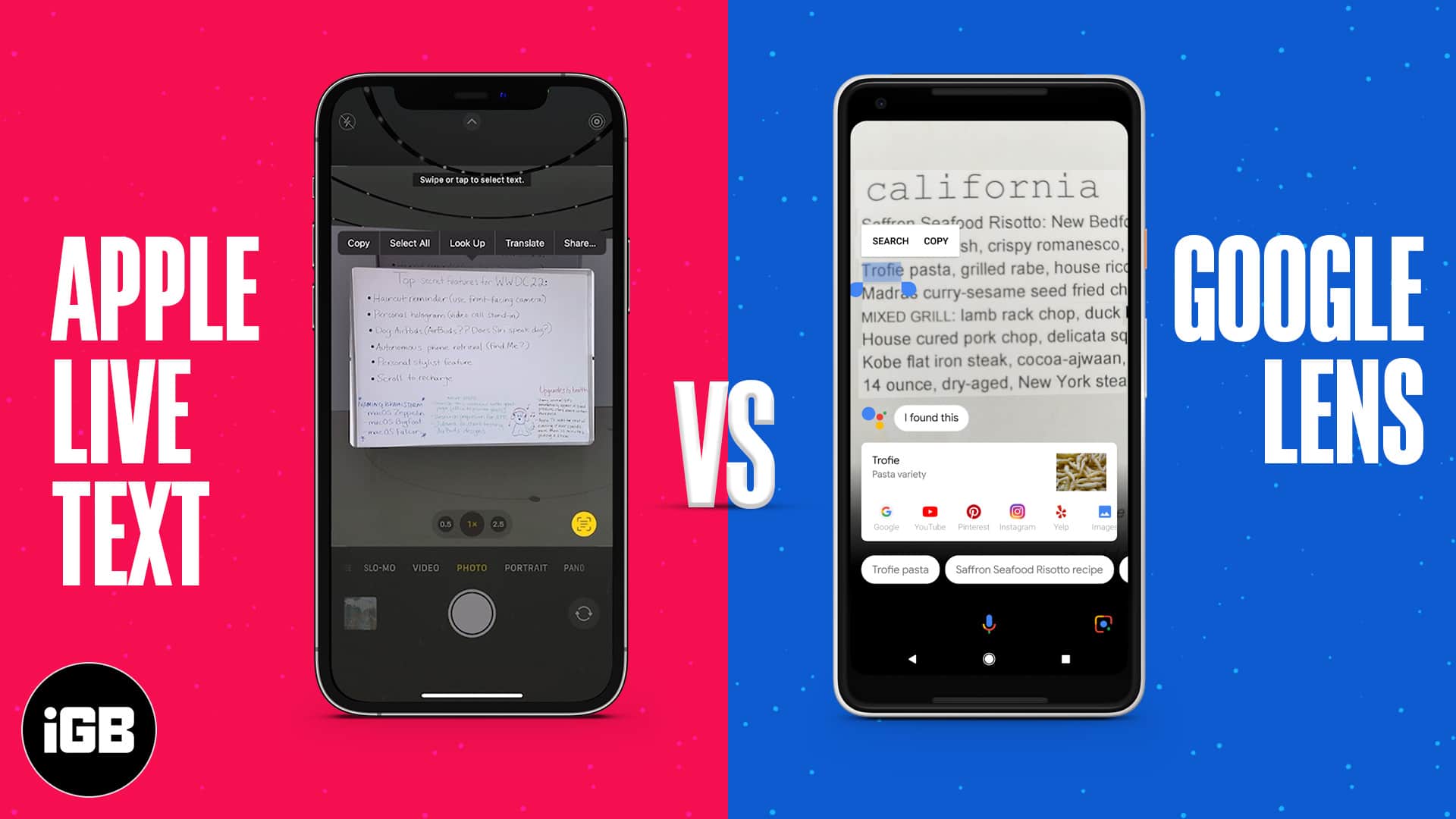

Live Text is essentially Apple's answer to Google Lens, so it offers many similar features.

How do I search by image on iPhone

Search with an image saved on your device

1. On your iPhone or iPad, open the Google app.
2. In the search bar, tap Google Lens.
3. Take or upload a photo to use for your search.
4. Select how you want to search.
5. At the bottom, scroll to find your search results.
6. To refine your search, tap Add to your search.

Is there a better app than Google Lens

CamFind is one of the oldest and best-known image search apps, and it's available for free on both Android and iOS. The app obviously isn't an exact replica of Google Lens, insofar as it doesn't do AR, but it is, quite simply, the next best thing.

Does Apple have a smart lens

At WWDC, Apple announced Live Text. It's similar to Google Lens and can identify text, objects, and numbers in photos.

Does Apple have lens

One of the best Android apps by far, Google Lens is also available on iOS devices and is incredibly easy to install. Whether you have the best iPad or the best iPhone, you'll find Google Lens works beautifully on both. Google Lens is also fantastic when you're out shopping.

How do I search by image in Apple

Using the Google App

You might think of this as an Android exclusive, but you can get easy access to Google Lens (and Google Assistant) on your iPhone by installing the Google app. To begin a reverse image search, open the Google app and tap the Lens icon, which looks like a colorful camera, in the search bar.

Does Apple have an image search feature

Using reverse image search on an iPhone can help you find similar images, websites that contain the exact image or a similar one, and even objects identified in the image. This feature can come in handy in a wide range of scenarios, from spotting fake photos to learning the breed of a dog.

What replaced Google Lens

Google Photos has one of the best search capabilities among gallery apps, allowing users to search images with specific objects, places, or people in them.

Is Google Lens only for Android

One of the best Android apps by far, Google Lens is also available on iOS devices, and is incredibly easy to install. Whether you have the best iPad or the best iPhone, you'll find Google Lens works beautifully on both.

Do iPhones have lens features

The native iPhone Camera app, as well as the native Photos app, comes equipped with nine filters. You can apply these while you take a picture or during the editing process.

What is smart eye in iPhone

Smart Eye App is a private messaging app. No one can see your private DMs except you and the person you sent them to. Conversations are end-to-end encrypted, and you can create private group chats. Terms of Use: https://www.apple.com/legal/internet-services/itunes/dev/stdeula/

Does iPhone have a smart lens

With SmartLens, simply point your iPhone at anything around you to recognize it in real time and receive instant proactive suggestions for what you might want to do next.

Does iOS have image search

Search with an image from search results

On your iPhone or iPad, go to Google Images. Search for an image. Tap the image. At the bottom left, tap Search inside image.

Can iPhones do image search

Keep your finger on the screen and swipe up to go home, but don't let go yet. Then open the Google app and drag the image up into the search box, where you see the little plus button.

Can an iPhone identify a picture

When your iPhone recognizes something in a photo, like a plant, animal, or landmark, the info button at the bottom of the screen will have a sparkle. Tap it, and then tap Look Up. A menu will appear.

Does Apple have Google search

Apple Uses Google Search Engine on Its Devices

Despite the growing competition, Apple chooses Google Search Engine over Bing, DuckDuckGo, and other available options as the default search engine for iPhone and other iOS devices.

Is Google Lens removed

On an Android phone, open Settings and tap Apps and Notifications. Tap the three dots in the upper right corner and tap Show system. Scroll down and tap the “Lens” icon. Tap the Disable button to disable Google Lens on your phone.

Is there an Apple lens

At WWDC, Apple announced Live Text. It's similar to Google Lens and can identify text, objects, and numbers in photos.

What is iOS lens

Google Lens for iOS turns objects within a photo, or your camera, into a search. Instead of typing, just send a photo and ask Google to tell you what it is.

Is there eye care on iPhone

Go to Settings > Display & Brightness, tap Schedule (do not touch the toggle for Blue Light Reduction), then tap From Sunset to Sunrise. This relies on your iPhone or iPad's clock and automatically shifts between daytime and Night Shift mode when the sun rises or sets.

What is the iPhone lens

Wide – Every iPhone made to date has the Wide lens, including all three iPhone 11 models. This is the most familiar lens and likely will continue to be the most frequently used. Technically speaking, it's a six-element lens with a 1x zoom and an f/1.8 aperture.

How does iPhone search Photos

When you tap Search in the Photos app, you see suggestions for moments, people, places, and categories to help you find what you're looking for, or rediscover an event you forgot about. You can also type a keyword into the search field (for example, a person's name, date, or location) to help you find a specific photo.

How does iPhone identify plants

Take a photo of the plant or animal you want to find out more about. Open the Photos app and open the photo you just took. Now tap the info button at the bottom, then tap Look Up.

Does Apple search your photos

The photo scanning will happen on the devices, not on the iCloud servers. Apple says it won't be looking at the pictures. Instead, it will convert the image data into code called hashes. The hashes of your photos will be compared with the hashes of known CSAM images in a database.
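The idea of comparing hashes instead of looking at the pictures themselves can be sketched roughly as follows. This is only an illustration under loud assumptions: it uses SHA-256, which matches byte-identical files only, as a stand-in for whatever image hash Apple actually uses, and the function names and sample data are hypothetical.

```python
import hashlib
from typing import Dict, List, Set


def digest(image_bytes: bytes) -> str:
    """Return a hex digest for the image data.

    Assumption: SHA-256 is a stand-in here, not the hash Apple uses.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(photos: Dict[str, bytes], known_hashes: Set[str]) -> List[str]:
    """Return the names of photos whose hash appears in the known-hash set."""
    return sorted(
        name for name, data in photos.items() if digest(data) in known_hashes
    )


# Hypothetical sample data: the database stores hashes only, never images.
known_hashes = {digest(b"known-image-bytes")}
photos = {
    "beach.jpg": b"holiday-image-bytes",
    "copy.jpg": b"known-image-bytes",
}

matches = find_matches(photos, known_hashes)
print(matches)  # ['copy.jpg']
```

Only the hash strings are compared, so the check never needs to interpret what a photo shows.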